Our developers are often required to work within existing systems, extending them or integrating them with several connected systems. From time to time, our team members need to create or refactor selected modules of a client’s solution.

In this project, our main focus was to improve the client’s event-driven analytics tools so that they could support rapid prototyping. The client’s customer base was growing, but the existing prototype code was not fast enough to process terabytes of data. Following our analysis, we recommended greater parallelisation of the event-processing engine.

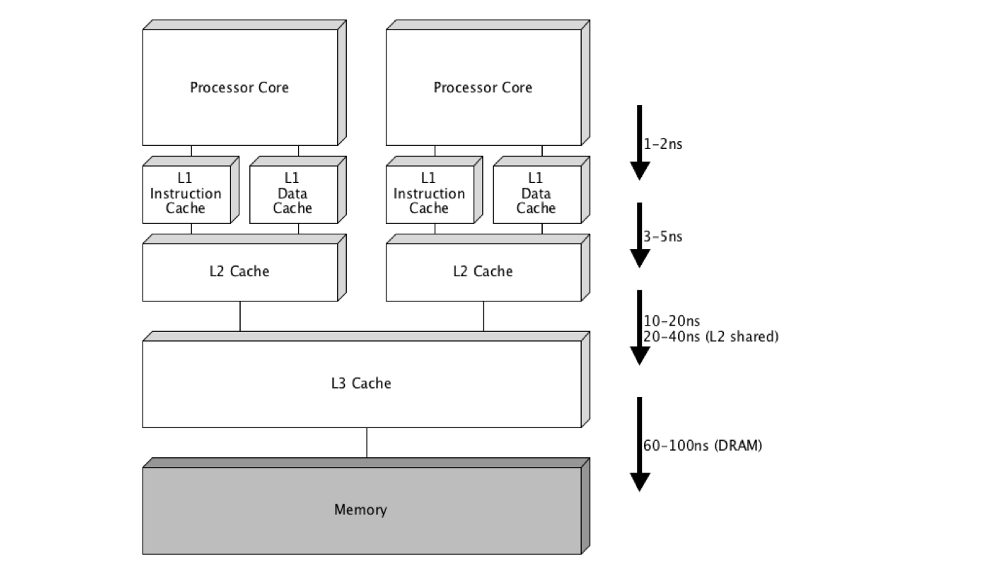

The client had High-Performance Computing (HPC) machines available on site which could be dedicated to data processing. However, writing programs that make effective use of the multi-core architecture of HPC machines calls for techniques that differ from everyday application coding.

The solution we built used the Akka Streams framework and Apache Kafka queues to process events concurrently. A number of low-level challenges needed to be resolved, such as: (i) limiting the number of context switches to avoid L2 cache reloading, (ii) splitting the event stream into multiple separate sub-streams, (iii) caching pre-computed results, and (iv) introducing non-locking concurrent collections of objects. These refactorings made processing between four and five times faster than the original code. This allowed our customer to swiftly release new functionality to the market and increase their client base.
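To make points (ii) to (iv) more concrete, here is a minimal sketch of the general idea. It is not the client's code: it uses only `java.util.concurrent` rather than Akka Streams or Kafka, and every name in it (`SubStreamSketch`, `Event`, `expensiveScore`, `process`) is hypothetical. Events are routed by key to a fixed set of single-threaded lanes (sub-streams), a `ConcurrentHashMap` caches a stand-in "expensive" computation, and `LongAdder` counters collect the totals. These JDK classes avoid a single global lock; they stand in for the non-locking collections and are not claimed to be strictly lock-free.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.LongAdder;

class SubStreamSketch {

    // Hypothetical event: a routing key (e.g. a customer id) and a numeric payload.
    static final class Event {
        final String key;
        final int value;

        Event(String key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    // Stand-in for a costly computation whose result is worth caching (assumption).
    static long expensiveScore(int value) {
        return (long) value * value;
    }

    // Splits the events into `subStreams` lanes by key and sums the scores per key.
    static Map<String, Long> process(List<Event> events, int subStreams) {
        ConcurrentHashMap<Integer, Long> cache = new ConcurrentHashMap<>();
        ConcurrentHashMap<String, LongAdder> totals = new ConcurrentHashMap<>();

        // One single-threaded executor per sub-stream: events that share a key
        // always land on the same thread and are handled in submission order.
        List<ExecutorService> lanes = new ArrayList<>();
        for (int i = 0; i < subStreams; i++) {
            lanes.add(Executors.newSingleThreadExecutor());
        }

        for (Event e : events) {
            int lane = Math.floorMod(e.key.hashCode(), subStreams);
            lanes.get(lane).execute(() -> {
                // Compute each distinct score once, then reuse the cached result.
                long score = cache.computeIfAbsent(e.value, SubStreamSketch::expensiveScore);
                // Accumulate without taking a lock shared by all lanes.
                totals.computeIfAbsent(e.key, k -> new LongAdder()).add(score);
            });
        }

        // Stop accepting work and wait for every lane to drain.
        try {
            for (ExecutorService lane : lanes) {
                lane.shutdown();
                lane.awaitTermination(1, TimeUnit.MINUTES);
            }
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while draining sub-streams", ex);
        }

        Map<String, Long> result = new TreeMap<>();
        totals.forEach((key, adder) -> result.put(key, adder.sum()));
        return result;
    }

    public static void main(String[] args) {
        List<Event> events = List.of(
                new Event("a", 2), new Event("b", 4),
                new Event("a", 2), new Event("a", 1));
        System.out.println(process(events, 3)); // prints {a=9, b=16}
    }
}
```

Routing by key keeps each key's events on a single thread, so per-key work needs no coordination between lanes. Plain Java cannot pin threads to cores, so this sketch only approximates the effect of point (i); a production pipeline would also need back-pressure, which is what a streaming framework such as Akka Streams provides.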

This exciting project exposed us to the unique features of High-Performance Computing and allowed us to greatly expand our knowledge of, and expertise in, parallel computing. A presentation summarising our findings can be found here.