1.INTRODUCTION

Cloud computing has become a widespread part of daily life, especially given how widely smartphones have been adopted. A typical phone application is heavily dependent on the infrastructure and remote processing capabilities that Clouds deliver. Also, in the area of desktop software, a significant number of applications have moved to the Software-as-a-Service model, where the desktop serves only as a client while the actual processing is done on a remote Cloud; for example, business subscriptions for Microsoft Office 365 offer access to cloud-hosted versions of Office programs. The world is therefore increasingly reliant on Cloud computing.

A variety of vendors, services and business models have created an extremely complex environment. The latest developments in Cloud technologies gravitate towards federated, inter-Cloud cooperative models and, therefore, already very complex solutions are destined to become even more complex. It is also a very competitive market. A recent rise in Big Data systems fuelled growth in demand for cheap computing power; however, a number of vendors joined and the market offering computing services has greatly expanded. Prices have also been driven down and, as of 24 July 2018, the cost of renting a general-use instance of a 16-core machine with 64GB memory was 80 cents per hour (data from aws.amazon.com/ec2/pricing website).

The main difference between a Cluster and a Cloud lies in the business model and access. Clusters were traditionally available only to a very limited number of institutions and corporations, which pooled their resources in order to gain the advantages of computing at scale. The general public did not have access to the computing capabilities of Clusters.

Because access to Clusters was very restricted and incoming workload was planned well in advance, it was practical to centralise task scheduling and system management functions. Therefore, a centralised architecture was generally used as a base for Cluster management software, such as SLURM [61], Univa Grid Engine [16], Google’s Borg [44], etc.

Cluster schedulers evolved from a sequential processing of allocation decisions in a loop (e.g. SLURM) towards more parallel solutions [44]:

- Statically partitioned scheduling in which the exclusive sets of machines in a Cluster are dedicated to certain types of workload and the resulting specialised partitions are managed separately. An example of such a design is Microsoft’s Quincy [39]. The main criticism of static partitioning is inflexibility – a homogeneous workload might result in part of the scheduler being relatively idle, while other nodes are very active.

- Two-level architecture in which a Cluster is partitioned dynamically by a central coordinator. The actual task allocations take place at the second level of architecture in one of the specialised schedulers. The first two-level scheduler was Mesos [20] in which resources are distributed to the frameworks in the form of ‘offers’ made by Mesos Master. Scheduling frameworks have autonomy in deciding which resources to accept and which tasks to run on them. The mechanism used causes resources to remain locked while the resources offer is being examined by a specialised scheduler. This means the benefits from parallelisation are limited due to pessimistic locking. In addition, the schedulers do not coordinate between each other and must rely on a centralised coordinator to make them offers, which further restricts their visibility of the available resources in the Cluster.

- Shared state is the most recent design, used in Google’s Borg [44] and Microsoft’s Apollo [7]. The concept behind this architecture is to deploy several schedulers working in parallel. The scheduler instances are using a shared object with a state of available resources; however, the resource offers are not locked during scheduling decisions (optimistic concurrency control). In the case of a conflict, when two or more schedulers allocated jobs to the same resources, all involved jobs are returned to the jobs queue and scheduling is re-tried.

This research focuses on the next type of scheduler architecture, which expands on the shared state scheduler concept where the scheduling logic is processed in parallel but still within a specific entity. The key novelty of the presented design is the distribution of the scheduling logic’s processing to the Cluster nodes themselves – this approach eliminates the requirement that all allocation decisions have to be synchronised and aggregated into a single state. Such a solution should scale beyond the limits of the currently deployed Cluster orchestration software while preserving or bettering the workload throughput and the quality of a centralised scheduler’s allocations. The key objective of this research was to experimentally evaluate the performance advances emerging out of the designed solution.

One of the most developed and most widely published Cluster managers is Google’s Borg system, which represents the shared state scheduler architecture. When allocating a task, Borg’s scheduler scores a set of available nodes (best-fit algorithm) and selects the most feasible machine for this task. The central module of the Borg architecture is BorgMaster, which maintains an in-memory copy of most of the state of the cell. Each machine in a cell runs BorgLet, an agent process responsible for starting and stopping tasks and also restarting them if they fail. A single BorgMaster controller is able to manage a cell of more than 12k machines (the highest value is not specified). Google’s engineers achieved this impressive result through a number of optimisations and, so far, they have managed to eliminate or work around virtually every limitation they have approached [51]. Nevertheless, a centralised architecture remains a limitation within the current design of a Cloud.

One might also ask a fundamental question – do Cloud systems really require more inter-connected nodes in one cell? The computing power of 12k machines working together is already a very considerable force and, barring a few exceptions, it’s highly unlikely that an application would require such processing power. However, in recent years, software development has been trending towards Big Data systems, which are characterised by a high degree of parallelism. A typical Big Data system design is based on a distributed file system, where nodes have the dual function of storing data as well as processing it. One process of such a system might need to crunch thousands of TBs of data split across thousands of nodes. Even with an ideal allocation of Big Data tasks, where every task processes only data available locally (i.e. locality optimisation), a single node would still need to process GBs of data locally. Therefore, the answer to the above question is yes – it’s highly likely that we will require larger computing cells in the near future.

Therefore, if a centralised architecture has its ultimate scalability limits, we should consider alternative approaches. In this paper we present a working prototype of a decentralised Cloud manager – Multi-Agent System Balancer (MASB), which relies on a network of software agents to organically distribute and manage the system load. The MASB prototype has been built on top of the Accurate Google Cloud Simulator (AGOCS) framework [48] and, as such, all research and development has been continuously tested on real-world workload traces from the Google Cluster Data (GCD) project [19].

The main novel aspects of this approach were to co-operatively schedule the incoming tasks by a network of software agents, which allows running programs to be offloaded to alternative system nodes on the fly, in addition to designing algorithms capable of proactively managing a workload in such a dynamic environment. Thus, this research breaks with the concept that the execution of a task in a cluster is immovable or unstoppable, and instead examines the available technology to implement such a strategy.

Furthermore, moving away from the concept of centralised load balancing and offloading the actual scheduling logic to the nodes themselves resulted in more time being available for the execution of allocation routines. As such, more sophisticated algorithms could be deployed, such as metaheuristic methods. Since none of the commercially available cluster schedulers realise such features, the objective of this research was to implement a working prototype of the Cloud load balancer, and to evaluate the performance advances emerging out of the designed solution.

The remainder of this paper is organised as follows. Section 2 provides an introduction to agent systems and a brief history of research into how they have been used to schedule tasks. Section 3 describes MASB’s design principles, defines the scope of the project and lists the main technologies used. Section 4 details the MASB architecture and its objects. Section 5 describes in detail the allocation negotiation protocol that is used between system components to allocate a new task or re-allocate an existing task on a node. Section 6 presents allocation score functions, which model the distribution of tasks and their allocations across nodes through tasks’ lifecycles. Section 7 specifies the experiments which were performed as part of this research and provides a discussion of the achieved results. Section 8 describes competitive solutions. In section 9, important optimisations and lessons learnt are presented.

2.LOAD BALANCING WITH AGENTS

Agent technologies can be dated back to 1992 [42], at which point it was predicted that intelligent agents would become the next mainstream computing paradigm. Agents were described as the most important step in software engineering, representing a revolution in software [18]. Since its inception, the field of multi-agent systems has experienced an impressive evolution, and today it is an established and vibrant field in computer studies. The software agents research field spans many disciplines, including mathematics, logic, game theory, cognitive psychology, sociology, organisational science, economics, philosophy, and so on [54]. Agents are considered to be a viable solution for large-scale systems, for example through spam-filtering and traffic light control [9], or by managing an electricity grid [8].

It is difficult to argue for any precise definition of an agent, with the research literature seeming to suggest that there are four key properties of an Agent [13][15][56], namely:

- Autonomy when allowing agents to operate without direct human intervention;

- Social ability when agents communicate and interact with other agents;

- Reactivity when agents actively perceive their environment (physical or digital) and act on its changes;

- Proactiveness when agents not only dynamically respond to changes in environments but are also able to take initiative and exhibit goal-oriented behaviour as well as real-time communications.

A software agent is generally defined as being capable of acting independently of its user in order to accomplish tasks on behalf of that user [36]. An agent can be described as a being which is supposed to act intelligently according to environmental changes and the user’s input [17].

Software agents are found across many computer science disciplines, including AI, decentralised systems, self-organising systems, load balancing and expert systems [18][30]. Previous research has also shown that by deploying agents it is possible to achieve good global system performance [34] and attain dynamic adaptation capability [23].

Agents were also found to be useful for the performance monitoring of distributed systems [10]. Several additional benefits may also be achieved, including more cost-effective resource planning [11], a reduction of network traffic [32], the autonomous activities of the agents [17], and decentralised network management [59]. Multi-agent systems were also successfully used for forecasting demand and then adapting the charging schedule for electric cars [58], and also to effectively coordinate emergency services during a crisis [37]. [40] presents an agent-based framework to model procurement operations in India. Most state-of-the-art research focuses on negotiation protocols and communications [28][31][53][57].

Agent-based systems generally rely on decentralised architecture [22][31][46][53], considering it to be more reliable. However, those schemas require complex communication algorithms, with negotiation protocols often being required for distributed architecture to attain a good level of performance [6][57][59].

The idea of job scheduling with agents is not new; a single-machine multi-agent scheduling problem was introduced in 2003 [3][4]. Since this time, the problem has been extended and exists in several variations, such as deteriorating jobs [26], the introduction of weighted importance [35], scheduling with partial information [27], global objective functions [50], and adding jobs’ release times and deadlines [60]. A suitable taxonomy of multi-agent scheduling problems is presented in [38].

The research on workload sharing via agents has a long history, with the papers below in particular having influenced the design of the MASB:

- [43] presents a study concerning a multi-agent system in which all decision making is performed by a learning AI. The likelihood of selecting a particular node for the processing of a given task depends on the past capacity of this node. The Agent’s AI uses only locally-accessible knowledge, meaning that it does not rely on information shared by other agents.

- [14] introduces Challenger, a multi-agent system, in which agents communicate with each other to share their available resources in an attempt to utilise them more fully. In Challenger, agents act as buyers and sellers in a resources marketplace, always trying to maximise their own utility. MASB follows a similar pattern, where nodes try to maximise their utilisation (via score system).

- [6] shows that cooperative negotiation between agents representing base stations in a mobile cellular network can lead to a near global optimal coverage agreement within the context of the whole cellular network. Instead of using a negotiation model of alternating offers, several possible local hypotheses are created, based on which parallel negotiations are initiated. The system commits to the best agreement found within a defined timeline. The cooperative model in which agents negotiate between themselves is the base of the distributed scheduling presented in this research.

- [23] proposes a load-balancing scheme in which a mobile agent pre-reserves resources on a target machine prior to the occurrence of the actual migration. The system also prevents excessive centralisation through the implementation of a mechanism whereby when the workload processed on a particular machine exceeds a certain threshold, this machine will attempt to offload its agents to neighbouring machines.

- [12] describes a solution in which agents representing a local grid resource use past application performance data and iterative heuristic algorithms to predict the application’s resource usage. In order to achieve a globally-balanced workload, agents cooperate with each other using a Point-to-Point (P2P) service advertisement and discovery mechanism. Agents are organised into a hierarchy consisting of agents, coordinators and brokers, who are at the top of the entire agent hierarchy. The authors conclude that for local grid load balancing, the iterative metaheuristic algorithm is more efficient than simple algorithms such as FCFS.

- [21] details a solution built on top of the ant colony algorithm, a solution which takes its inspiration from the metaphor of real ants searching for food. ‘Ants’ are software objects that can move between nodes managed by agents. A move between nodes which is managed by the same agent is less costly. Ants explore paths between nodes, marking them with different pheromone strength. Whenever an Ant visits a node, the agent managing it saves the recorded tour and updates its own database. Ants who subsequently visit this node read its current knowledge, meaning they have the potential to exchange information in this environment, which adds to the predictability of the whole solution.

3.MASB DESIGN PRINCIPLES

The MASB project has been developed over several years, during which time it has undergone many changes in terms of both the technology used and the design of the architecture. This has included, for example, migration from Java to Scala, the change from thread pools to an Akka Actors/Streams framework, and the introduction and use of concurrency packages and non-locking object structures. However, the main design principles have not been altered and are presented below:

- To provide a stable and robust (i.e. no single point of failure) load balancer and scheduler for a Cloud-class system;

- To efficiently reduce the cost of scaling a Cloud-class system so that it can perform in an acceptable manner on smaller clusters (where there are tens of nodes) as well as on huge installations (where there are thousands of nodes);

- To provide an easy way of tuning the behaviours of a load balancer where the distribution of tasks across system nodes can be controlled.

Many other Cluster managing systems, such as Google’s Borg [51], Microsoft’s Apollo [7] and Alibaba’s Fuxi [63], were built around the concept of the immovability and unstoppability of a task’s execution. This means that once a task is started it cannot be re-allocated: it can only be stopped/killed and restarted on an alternative node.

This design is particularly well suited when there is a high task churn, as observed in Apollo or Fuxi where tasks are generally short-lived, meaning that the system’s scheduling decisions do not have a lasting impact. However, in order to support a mixed workload which features both short-lived batch jobs and long-running services, alternative solutions needed to be developed. One such solution is the resource recycling routines present in Borg wherein resources allocated to production tasks but not currently employed are used to run non-production applications [51].

MASB takes advantage of virtualisation technology features, namely Virtual Machine Live Migration (VM-LM), to dynamically re-allocate overloading tasks. VM-LM allows programs which are running to be moved to an alternative machine without stopping their execution. As a result, a new type of scheduling strategy can be created which allows for the continuous re-balancing of the cluster’s load. This feature is especially useful for long-term services which initially might not be fitted to the most suitable node, or where their required resources or constraints change. Nevertheless, this design creates a very dynamic environment in which it is insufficient to schedule a task only once. Instead, a running task has to be continuously monitored and re-allocated if the task’s current node cannot support its execution any longer.

The design of MASB relies on a number of existing tools and frameworks. The main technologies used are listed below:

- Decentralised software agents – a network of independent AI entities that can negotiate between each other and allocate Cloud workload between them. In MASB, specialised agents control nodes and manage the system workload. Due to the decentralised nature of MASB, there is no complete up-to-date system state. Instead, yet another type of agent is responsible for caching the nodes’ statistics and providing an interface whereby a set of candidate nodes which a particular task can be migrated to can be requested.

- Metaheuristic selection algorithms – while the majority of the processing of load balancing logic is done via negotiation between agents, a few system processes are handled locally. One such example is that when an agent discovers its node is overloaded, it will select a subset of its tasks which it will attempt to migrate out. This selection is performed by Tabu Search (TS) algorithm.

- VM-LM which allows the transfer of a running application within the Virtual Machine (VM) instance to an alternative node without stopping its execution. The vendors’ strategy is to run mixed production and low-priority jobs on a single machine. While production jobs are idle, low-priority jobs consume the node’s resources. However, when production job resources need to be increased, the low-priority jobs are killed. The non-production jobs in Google Cluster [51] and the spot-instances in Amazon EC2 [52] use such an approach. MASB instead takes advantage of VM-LM to offload tasks without stopping their execution, collecting information about tasks in order to estimate the VM-LM cost of such a task.

- Functional programming language Scala and accompanying libraries – due to the decentralised design and loose coupling between the system’s components, the implementation language is of secondary importance. However, load balancing algorithms require a significant amount of tuning, especially if the Cloud is designed to have a high utilisation of available resources. High utilisation means that resource waste is low, and therefore the cost-per-job execution is also low. Due to the complexity of inner-system relations and dependencies, a high-fidelity simulation environment is necessary to evaluate the expected performance of a given configuration and of implemented changes before it is deployed to a production system, e.g. the FauxMaster simulator used by Google Engineers [51]. In this implementation, the Akka Actors framework was selected as the core parallelisation technology.

4.MASB ARCHITECTURE

The experiments in [49] that used a centralised load balancer based on metaheuristic algorithms demonstrated that, due to the high overheads of these algorithms, a scheduling strategy implemented on a single machine is highly unlikely to efficiently manage a large number of tasks. Therefore, MASB has been built around the concept of a decentralised load balancing architecture, an architecture which could scale well beyond the limits of a centralised scheduler.

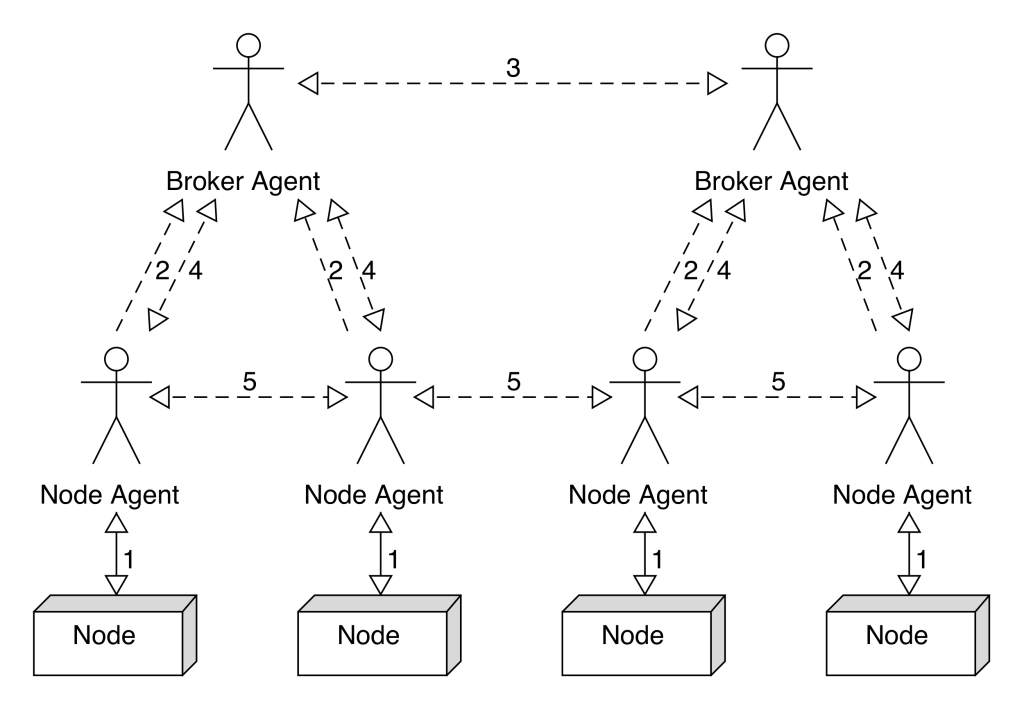

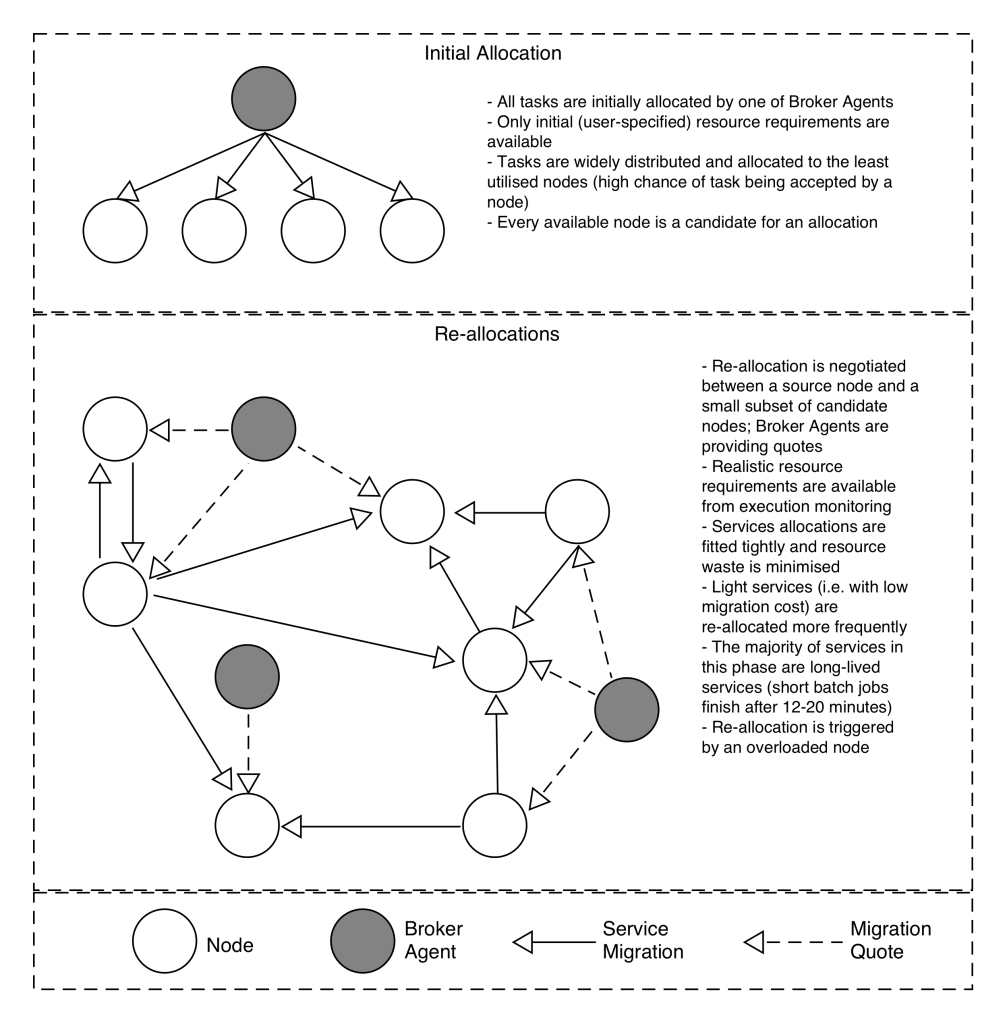

Figure 1 visualises the communications’ flow within MASB system:

Figure 1. MASB communications’ flow

MASB relies on a network of software agents to organically distribute and manage the sizeable system load. All communication between the agents is performed via a specialised stateless P2P protocol which promotes loose coupling. Two types of agents are deployed: Node Agent (NA) and Broker Agent (BA). NAs supervise system nodes and are responsible for keeping those nodes stable. NAs actively monitor the used resources on their nodes (1) and periodically forward this information to the subnetwork of BAs (2). BAs continuously exchange nodes’ load information between themselves (3) and, therefore, effectively cache the state of the computing cell.

Each NA contains an AI module, based on the TS metaheuristic algorithm, which manages the workload on its node. When an NA detects that its node is overloaded, it will attempt to find an alternative node for the overloading tasks with the help of the Service Allocation Negotiation (SAN) protocol (the details can be found in section 5). The first step of SAN communication is to retrieve alternative nodes from BA (4). BAs provide a query mechanism for NAs, which returns a set of candidate nodes for the migration of tasks. However, because the information found in BAs is assumed to be outdated, once the NA completes this step, it communicates directly with the candidate nodes’ NAs so as to re-allocate the task (5).

The following two subsections describe the types of agents noted and detail their responsibilities. The annotated arrows 2 to 5 in Figure 1 correspond to inter-agent communications – messages that are exchanged within the system are detailed in subsection 4.3.

4.1.NODE AGENT

Every node in the system has a dedicated instance of NA. NA continuously monitors the levels of defined resources and periodically reports the state of its node and levels of utilised resources to BAs. Should any of the monitored resources be over-allocated, NA will initialise SAN process. In addition, NA performs the following functions:

- Accept/deny task migration requests – NA listens to task migration requests, and accepts or denies them. This routine is simple, with NA projecting its resource availability with that task as follows: projected allocation of resources = current allocation of resources (existing tasks which also includes tasks being migrated out from this node) + all tasks being migrated to this node + requested task (from request). If the projected resources do not overflow the node, the task is accepted and the migration process is initiated. The source node does not relinquish ownership of the task while it is being re-allocated, meaning that source node is regarded as a primary supplier of the service until the migration process successfully completes. It should be noted that during task migration, its required resources are allocated twice, to both the source node and the target node.

- Task migration – after accepting the task migration request, NA immediately starts listening for incoming VM-LM. In order to perform task migration, NA must have access to the administrative functions of VM and be able to initiate VM-LM to another node. This functionality can be either implemented by the calls of the VM manager API or by executing the command line command. This process may vary considerably per VM vendor.
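The accept/deny projection described in the first item above can be sketched as follows. This is a minimal illustration in Python rather than MASB's Scala code; the `Resources` type, its two dimensions (CPU and memory) and the function name `accepts_migration` are assumptions made for this example only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """Hypothetical two-dimensional resource vector (CPU cores, memory in GB)."""
    cpu: float
    mem: float

    def __add__(self, other):
        return Resources(self.cpu + other.cpu, self.mem + other.mem)

    def fits_in(self, capacity):
        return self.cpu <= capacity.cpu and self.mem <= capacity.mem


def accepts_migration(capacity, current, incoming, requested):
    """Return True if the projected allocation does not overflow the node.

    current   -- resources of tasks on the node, including tasks that are
                 being migrated out (they stay counted until they leave)
    incoming  -- resources of tasks currently being migrated to this node
    requested -- resources of the task named in the migration request
    """
    projected = current
    for task in incoming:
        projected = projected + task
    projected = projected + requested
    return projected.fits_in(capacity)
```

Because outgoing tasks remain in `current` until their migration completes, the sketch reproduces the double allocation noted above: a task in flight is counted on both the source and the target node.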

4.2.BROKER AGENT

BA is responsible for storing and maintaining information about nodes’ online status and their available resources. BA is a separate process which can coexist with NA on the same node since its operations are not computing-intensive. BA has two main purposes in the system. These are outlined below:

- Node resource utilisation database – NA periodically reports to its BA about the state of its node and available resources. BA stores all this data and can query it on demand. Every node entry is additionally stored with its timestamp, showing how long ago the data were updated. This provides additional protection against a node silently going offline, for example through hardware malfunction or the network becoming unreachable: if an entry is not updated for five minutes, the node is assumed to be offline and the entry is removed. This means that it will not be returned as a candidate node.

- Evaluating candidate nodes for a task migration – BA listens for requests and computes a list of candidate nodes for a task migration. In order to create a list of candidate nodes, BA retrieves nodal data from the local cache and then scores them using the Allocation Scoring Function. BA scores the future state of the system as if the task migration were being carried out. After scoring all the cached nodes, BA selects a configured number of candidate nodes with the highest score and sends them back to the asking node. In this research this number was set to fifteen candidate nodes, as higher numbers failed to yield superior results.
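The two BA responsibilities above can be illustrated with a small sketch, again in Python for brevity. The class name `BrokerCache`, the injectable `clock` and the `score` callable are hypothetical; in particular, `score` stands in for the Allocation Scoring Function, which is not reproduced here. The sketch implements the plain highest-score selection described in this subsection, not the randomised refinement discussed in section 5.

```python
import time

STALE_AFTER_SECONDS = 5 * 60  # entries not updated for five minutes are dropped


class BrokerCache:
    """Hypothetical in-memory store of node reports kept by a Broker Agent."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._entries = {}  # node id -> (reported free resources, report time)

    def report(self, node_id, free):
        """Record a periodic report from a Node Agent."""
        self._entries[node_id] = (free, self._clock())

    def purge_stale(self):
        """Remove nodes that have silently stopped reporting."""
        now = self._clock()
        stale = [n for n, (_, ts) in self._entries.items()
                 if now - ts > STALE_AFTER_SECONDS]
        for node_id in stale:
            del self._entries[node_id]

    def top_candidates(self, task, score, limit=15):
        """Score every live node as if `task` were placed on it; keep the best."""
        self.purge_stale()
        scored = [(score(free, task), node_id)
                  for node_id, (free, _) in self._entries.items()]
        scored.sort(reverse=True)
        return [node_id for _, node_id in scored[:limit]]
```

Passing the clock in as a parameter is a design choice of the sketch only; it makes the five-minute expiry easy to exercise in a test without waiting.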

4.3.MESSAGE TYPES

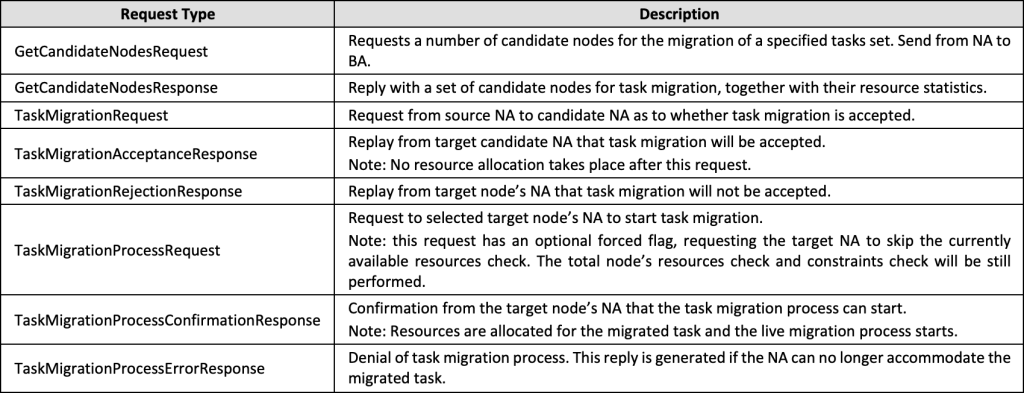

In order to avoid costly broadcasts, since broadcast packets need to be rerouted through the whole network infrastructure and consume the available bandwidth, both NA and BA always communicate P2P. Agent-to-agent interactions follow the ‘request-response’ pattern, in which each request object has one or more matching response objects. The message objects carry additional metadata such as the fitness value (see the formula in subsection 5.1), the forced migration flag, and detailed node and task information. Section 5 explains the process in which messages are exchanged, while subsections 5.1 to 5.4 show detailed samples of such objects. The several types of requests and responses exchanged between agents in the system are outlined in Table 1 below:

Table 1. Message types

5.SERVICE ALLOCATION NEGOTIATION PROTOCOL

When NA detects its node is overloaded, it will select a task (or a set of tasks) and attempt to migrate them to an alternative node or nodes. Since SAN is asynchronous, this means a single NA can run several SAN processes in parallel. In the current implementation, NA selects a number of tasks in the first step – Select Candidate Services (SCS) – and processes their allocation in parallel.

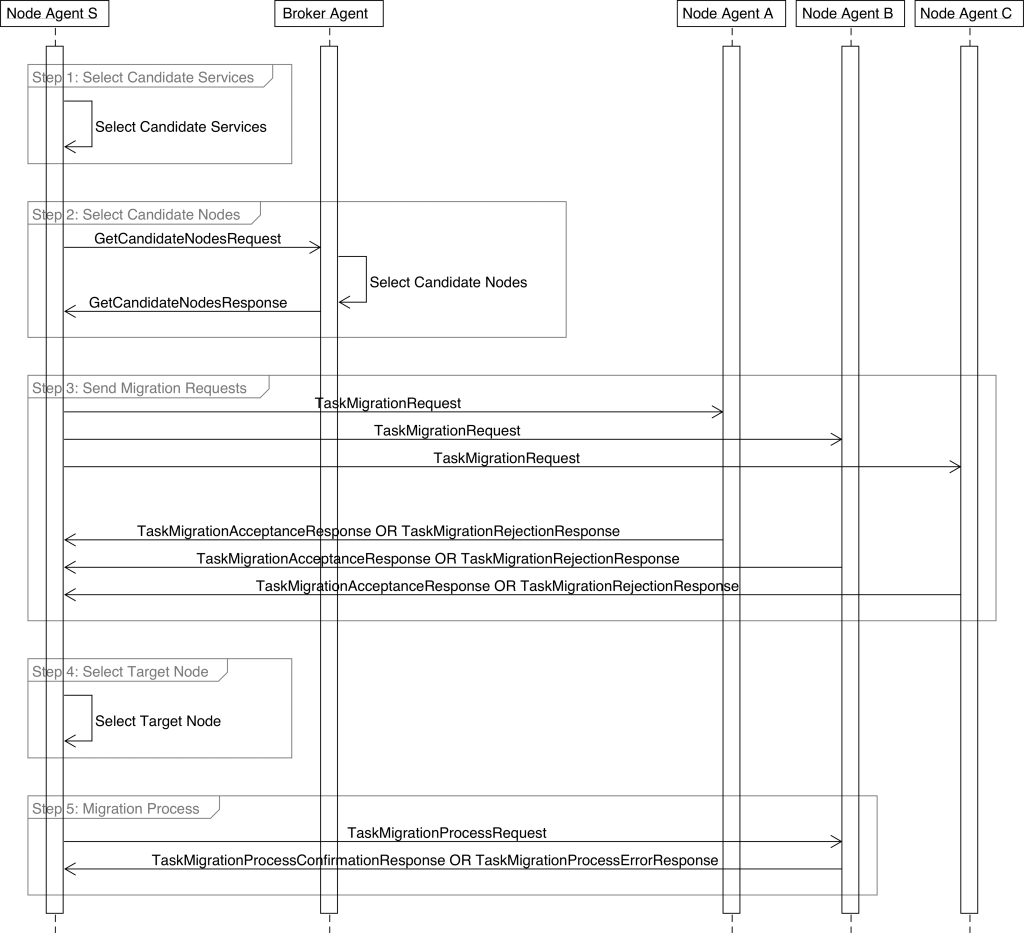

Figure 2 visualises this process – for simplicity, the chart presents the allocation negotiation of one task only.

Figure 2. Service Allocation Negotiation

SAN is a five-stage process, involving a single source node (Node Agent S), one of the system BAs and several other nodes in the system (Node Agent A, Node Agent B and Node Agent C). When migrating out a given task, NA first sends a GetCandidateNodesRequest to BA to obtain a set of candidate nodes to which the task can potentially be migrated. BA scores all its cached nodes and sends back fifteen candidates to NA. In order to help avoid collisions, BA does not simply select the top-scoring nodes, but instead selects them randomly from a node pool, using each candidate node’s score as a weight, so that higher-scored nodes are selected more frequently. This design helps to avoid a situation where an identical subset of candidate nodes is repeatedly selected for a number of tasks with the same resource requirements.
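One possible reading of this score-weighted selection is a weighted draw without replacement, sketched below in Python. The function name `pick_candidates` and the requirement that scores are strictly positive are assumptions of the example; the paper does not specify how the draw is implemented.

```python
import random


def pick_candidates(scores, limit=15, rng=None):
    """Draw up to `limit` distinct nodes, each draw weighted by node score.

    scores -- mapping of node id to a strictly positive allocation score
              (assumed here; zero or negative weights are not handled)
    """
    rng = rng or random.Random()
    pool = dict(scores)
    chosen = []
    while pool and len(chosen) < limit:
        nodes = list(pool)
        weights = [pool[n] for n in nodes]
        node = rng.choices(nodes, weights=weights, k=1)[0]
        chosen.append(node)
        del pool[node]  # without replacement: a node is offered at most once
    return chosen
```

Two requests for tasks with identical requirements will, under this scheme, usually receive different candidate lists, which is the collision-avoiding effect described above.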

Upon receiving this list, NA sends task migration requests to all of those candidate nodes (Step 3), and waits for a given time (in this case thirty seconds) for all replies. After this time, NA evaluates all accepted task migration responses (Step 4), orders them by relevance (nodes with the highest score first) and then attempts to migrate the task to the target node with the top score (Step 5). If the target node returns an error, the source NA will pick the next target node and attempt to migrate the task there.

At each of these stages, the target node’s NA might reject task migration or return an error, for example when task migration is no longer possible because the current node’s resource utilisation levels have increased or because the node attributes no longer match the task’s constraints. Depending on a system utilisation level, such collisions might be more or less frequent. However, they are resolved at node-to-node communication level and do not impact the system performance as a whole.
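Steps 3 to 5 on the source node can be summarised in a short sketch. It is written in Python and is deliberately sequential, so it does not model the asynchronous messaging or the thirty-second wait; the function name and the two callables `request_migration` and `migrate` are hypothetical stand-ins for the corresponding SAN messages, and it is assumed that each accepted response carries the node's score.

```python
def negotiate_migration(task, candidates, request_migration, migrate):
    """Try to move `task` to the best accepting candidate; return its id or None.

    candidates        -- node ids returned by the broker
    request_migration -- callable(node, task) -> score if accepted, None if rejected
    migrate           -- callable(node, task) -> True on success, False on error
    """
    # Step 3: ask every candidate node whether it would accept the task.
    accepted = []
    for node in candidates:
        score = request_migration(node, task)
        if score is not None:
            accepted.append((score, node))

    # Step 4: order the accepted responses, highest score first.
    accepted.sort(key=lambda pair: pair[0], reverse=True)

    # Step 5: attempt the migration, falling back to the next node on error.
    for _, node in accepted:
        if migrate(node, task):
            return node
    return None
```

A rejection or error from one target only moves the source on to its next candidate, which is why such collisions stay local to the nodes involved.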

In a situation where there are insufficient candidate nodes available due to the lack of free resource levels, the BA will return candidate nodes with the ‘forced migration’ flag set to true.

The algorithm’s five steps are explained in the following subsections, while the forced migrations feature is detailed in supplementary subsection 5.6 below.

5.1.STEP 1: SELECT CANDIDATE SERVICES

The SCS routine is executed when the NA detects that the currently existing tasks are overloading its node. This step is processed wholly locally on the node. The purpose of this routine is to select the task (or set of tasks) that NA will attempt to migrate out so that the node becomes stable (i.e. non-overloaded). All tasks currently running on this node are evaluated, taking into consideration various aspects, namely:

- The cost of running a task on this particular node. NA will aim to have the highest node score for its own node. If removing this particular task will cause its Allocation Score (AS) to be higher, then this task is more likely to be selected. AS is calculated by Service Allocation Score (SAS) functions – see section 6 for details.

- The cost of migration of a task – VM migrations cause disruptions on the Cloud system. In this research, cost is estimated by Live Migration Data Transfer formula [47] as the additional network traffic required to migrate the running VM instance to an alternative node.

- The likelihood of finding an alternative node – the majority of tasks do not have major constraints and can be executed on a wide range of nodes. However, there are a small number of tasks with very restrictive constraints that significantly limit the number of nodes that the task can be executed on. If such a task can only be executed locally, i.e. the node has enough total resource capacity and the task’s constraints are matched, then NA is unlikely to migrate out such a task.

- Any task which cannot be executed on the local node is compulsorily selected as a candidate task. This scenario could occur if the task constraints or node attributes were updated.

NA first computes a list of compulsory candidate tasks, i.e. tasks that can no longer be executed on this node. Following this, if the remaining tasks are still overloading the node, it will select a subset of tasks to be migrated out.

The candidate tasks selection algorithm tries to minimise the total migration cost of selected tasks, and also to achieve the highest AS for a node, under the assumption that the selected subset of candidate tasks is successfully migrated to the alternative node. In order to achieve this, the algorithm defines the Fitness Function as coded inside SCS: Fitness Function = Node Allocation Score / Total Migration Cost.

The above is an NP-hard problem with a substantial search space: e.g. for twenty tasks on a node, there are over one million possible subsets (2^20 = 1,048,576). Given this, the Full Scan approach [47] would be computationally very intensive, and the use of metaheuristic algorithms is therefore justified. In a previously researched scheduling concept, a variant of TS was successfully applied to solve a similar class of problems. The TS algorithm has the following properties:

- It has a small memory footprint since only the list of visited solutions is maintained throughout execution;

- It can be easily parallelised as a variant which is restarted multiple times;

- It is very controllable through setting up a limited number of steps and number of runs;

- It is stoppable, and the best-found result can be retrieved immediately;

- It generally returns good results.

It was found that multiple restarts (herein a twenty-five re-run limit) with a shallow limit of steps (herein five) yield very good results, with only about 2-7% of solutions in the whole search space (i.e. selecting a subset of tasks being run on a node) being examined in each invocation. Additionally, instead of restarting the algorithm an arbitrary number of times, a stop condition for this algorithm has been implemented when the best-found solution has not been improved in a certain number of the last steps (herein six).
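The selection routine described above can be sketched as a restarted Tabu Search over subsets of tasks. This is a minimal illustration rather than the MASB implementation: the limits of twenty-five runs, five steps and six non-improving steps come from the text, whereas `toy_node_score` is a hypothetical stand-in for the SAS function, the single-resource (CPU only) model and the "add or remove one task" neighbourhood are assumptions made for brevity, and migration costs are assumed to be positive.

```python
import random
from typing import FrozenSet, List, Tuple

Task = Tuple[str, float, float]  # (task id, CPU usage, migration cost in MB)


def toy_node_score(cpu_used: float, cpu_total: float) -> float:
    """Hypothetical stand-in for a SAS function (not the paper's formula)."""
    ratio = cpu_used / cpu_total
    return 0.0 if ratio > 0.9 else ratio  # above 90% usage scores zero


def fitness(selected: FrozenSet[int], tasks: List[Task], cpu_total: float) -> float:
    """Fitness Function = Node Allocation Score / Total Migration Cost."""
    if not selected:
        return 0.0
    remaining = sum(t[1] for i, t in enumerate(tasks) if i not in selected)
    cost = sum(tasks[i][2] for i in selected)
    return toy_node_score(remaining, cpu_total) / cost


def select_candidate_tasks(tasks, cpu_total, runs=25, steps=5, patience=6, seed=0):
    """Restarted Tabu Search over subsets of task indices."""
    rng = random.Random(seed)
    best, best_fit, stale = frozenset(), 0.0, 0
    for _ in range(runs):
        current = frozenset({rng.randrange(len(tasks))})
        visited = {current}  # tabu list: solutions already visited in this run
        start_fit = fitness(current, tasks, cpu_total)
        if start_fit > best_fit:
            best, best_fit, stale = current, start_fit, 0
        for _ in range(steps):
            # Neighbourhood: add or remove exactly one task.
            moves = [current ^ {i} for i in range(len(tasks))]
            moves = [m for m in moves if m and m not in visited]
            if not moves:
                break
            current = max(moves, key=lambda m: fitness(m, tasks, cpu_total))
            visited.add(current)
            current_fit = fitness(current, tasks, cpu_total)
            if current_fit > best_fit:
                best, best_fit, stale = current, current_fit, 0
            else:
                stale += 1
                if stale >= patience:  # best solution not improved recently
                    return best, best_fit
    return best, best_fit
```

Because the best-found subset is tracked separately from the current solution, the search can be interrupted at any point and still return a usable result, which matches the "stoppable" property listed above.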

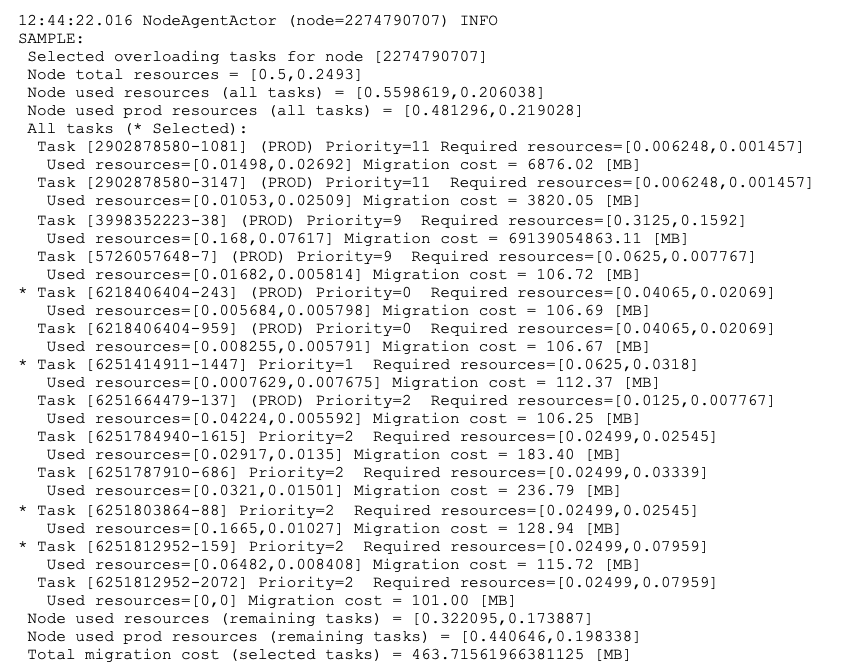

A sample log entry is presented below, wherein the subset of candidate tasks is being computed:

Here, the thirteen tasks are being executed on node ‘2274790707’. However, the used resources exceed the node’s total resources, i.e. all tasks are utilising 0.5598619 CPU, while the node can provide only 0.5 CPU (values are normalised). The node’s NA detects the node is overloaded and triggers the SCS routine. The SCS routine selects four tasks (here: the production task ‘6218406404-243’ and non-production tasks: ‘6251414911-1447’, ‘6251803864-88’ and ‘6251812952-159’; marked with *) which are then added to candidate tasks, and NA will attempt to migrate out this set in the next step.

The potential reduction of used resources is an effect of removing a subset of tasks from this node: (i) CPU reserved for production tasks is potentially reduced from 0.481296 to 0.440646 which is ca. 88% utilisation of total 0.5 CPU available on this node, and (ii) memory reserved for production tasks is potentially reduced from 0.219028 to 0.198338 which is ca. 80% utilisation of the total 0.2493 memory available on this node. The total migration cost for this set of migrations is ca. 463.72MB.

5.2.STEP 2: SELECT CANDIDATE NODES

After selecting candidate tasks, NA sends a GetCandidateNodes request to BA. As part of this request, task information data, such as the currently used resources and constraints, is sent. BA itself caches a list of all nodes in the system with their available resources and attributes. Based on this information, BA prepares a list of alternative candidate nodes for the task in the request. The main objective of this process is to find alternative nodes which have the potentially highest node AS, under the assumption that the task will be migrated to a scored node. The size of this list is limited to an arbitrary value to avoid network congestion when NA sends the actual migration requests in the next step. In this implementation, it is set to fifteen candidate nodes returned in each response.

This step is the most computationally intensive of all, and represents a potential bottleneck for negotiating logic processing. BA needs to examine all system nodes, check their availability for a given task and score them accordingly. The request processing is self-contained and highly concurrent, meaning that the node scoring can be run in parallel, while the final selection of top candidate nodes is run in sequence. Originally, this code was extensively profiled and improved, and BA was designed to be able to run in a multi-instance mode if needed and to handle heavy usage. However, in experiments, the quoting mechanism proved to be very lightweight and the demand not that high, meaning that a single BA was sufficient to handle 12.5k nodes in the system.
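The split between parallel node scoring and a sequential top-candidate selection can be illustrated as follows. This is only a sketch under stated assumptions: `score_node` is a hypothetical placeholder for the scoring function, constraints are modelled as simple attribute equality, and a thread pool is used purely to show the structure (the prototype itself runs on Akka actors). The limit of fifteen returned candidates is taken from the text.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Optional, Tuple


def score_node(node: Dict, task: Dict) -> Optional[float]:
    """Hypothetical scoring stand-in: None if the node is unsuitable."""
    # Constraint check (assumed to be plain attribute equality).
    if not task["constraints"].items() <= node["attributes"].items():
        return None
    ratio = (node["cpu_used"] + task["cpu"]) / node["cpu_total"]
    return None if ratio > 0.9 else ratio  # keep 10% headroom


def get_candidate_nodes(nodes: List[Dict], task: Dict, limit: int = 15) -> List[Tuple[float, str]]:
    # Phase 1: score every node independently, in parallel.
    with ThreadPoolExecutor() as pool:
        scores = list(pool.map(lambda n: score_node(n, task), nodes))
    # Phase 2: sequentially keep only the top-scored suitable nodes.
    scored = [(s, n["id"]) for s, n in zip(scores, nodes) if s is not None]
    return heapq.nlargest(limit, scored)
```

Each node is scored without reference to any other node, which is what makes the first phase trivially parallel; only the final ranking needs a single consolidated view.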

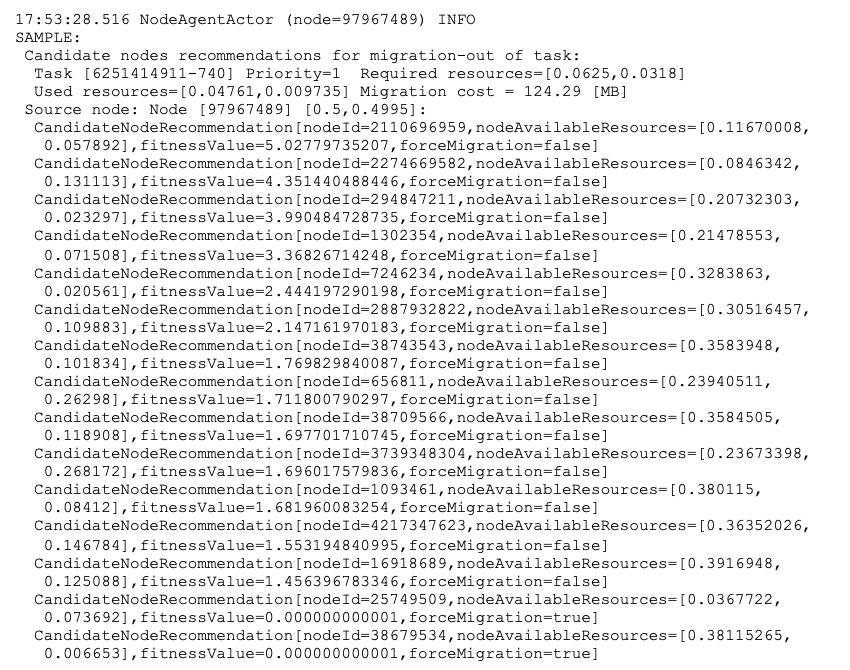

Below, a sample log entry is presented when such a list is computed and returned to a NA:

Here, NA on node ‘97967489’ requested candidate nodes for the migration of the task ‘6251414911-740’. BA returned top candidate nodes for a given task ordered by their suitability score, i.e. fitness value. Here values returned are: 5.02779735207 for node ‘2110696959’, 4.351440488446 for node ‘2274669582’, 3.990484728735 for node ‘294847211’, 3.36826714248 for node ‘1302354’, and so on. Additionally, the last recommendations for nodes ‘257495090’ and ‘38679534’ are forced-migrations (forceMigration is set to true).

Within the node recommendation there is additional information, such as node available resources and other metadata (not shown in listing). It is not necessary to return this extra information, but it was found to be very useful for logging and sampling purposes, and then efficient tuning of the system (for details see subsection 7.4).

5.3.STEP 3: SEND MIGRATION REQUESTS

Forced migration candidates will always be added to the list of accepted candidate nodes in the next step, but with minimal scores. Each NA analyses its own node availability for a given task, i.e. both the available resources and the node’s attributes, and responds with TaskMigrationAcceptanceResponse or TaskMigrationRejectionResponse.

An acceptance response only implies the readiness to accept a task; NA does not yet allocate any resources (the resource allocation is part of the task migration request process, as detailed in Step 5). Additionally, the TaskMigrationAcceptanceResponse message contains the node’s current resource usage levels, which are used in the next step to rescore this node, since the data from BA are less recent.

5.4.STEP 4: SELECT TARGET NODE

NA waits for a defined time, or until all candidate nodes have responded by either the acceptance or rejection of a migrated task, and computes a list of nodes that accepted this task. NA evaluates each of the accepting nodes using the Service Re-allocation Score (SRAS) function, with the assumption that the task will be re-allocated to a scored node. From this pool, a target node is then selected. The selection is weighted with node scores but still randomised, which helps to avoid conflicts when many task migrations compete for the same node.

As noted above, all forced migration candidate nodes will be added to this list but will be selected only in last place, once all other alternative migration attempts have failed. This strategy ensures that NA always has an alternative node to offload the task. A scenario in which only one node is capable of running a given task is considered to be an error, and is reported to the system administrator. For fault-tolerance reasons, the system should always have multiple nodes able to run any given task.
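The selection rule described in this step, weighted by score but still randomised, with forced candidates used only as a last resort, can be sketched as below. The tuple layout and the function name are illustrative assumptions; the scores would come from the SRAS function, which is not reproduced here.

```python
import random
from typing import List, Optional, Tuple

Candidate = Tuple[str, float, bool]  # (node id, re-scored value, forced flag)


def select_target_node(candidates: List[Candidate], rng: random.Random) -> Optional[str]:
    # Prefer nodes that accepted the task voluntarily and have a positive score.
    regular = [c for c in candidates if not c[2] and c[1] > 0]
    if regular:
        weights = [c[1] for c in regular]
        # Higher-scored nodes are more likely, but not certain, to be picked.
        return rng.choices(regular, weights=weights, k=1)[0][0]
    # Only when no regular candidate is left, fall back to forced candidates.
    forced = [c for c in candidates if c[2]]
    if forced:
        return rng.choice(forced)[0]
    return None  # nothing left: the caller restarts from Step 1
```

If the chosen node later rejects the migration, the caller would remove it from `candidates` and call the function again, so forced candidates are reached only after every regular attempt has failed.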

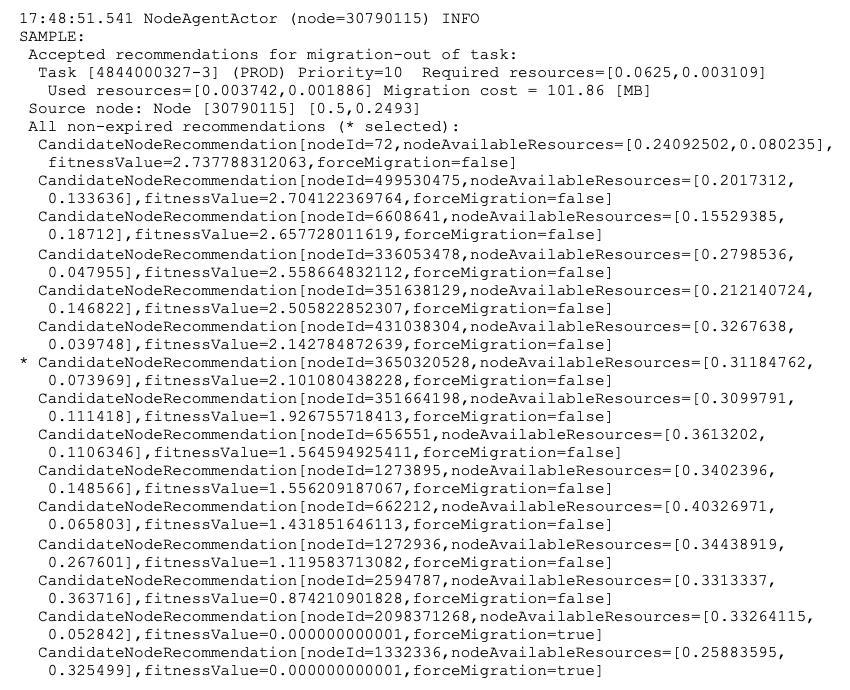

A sample log entry is presented below:

Here, NA on node ‘30790115’ is selecting a target node for the migration of task ‘4844000327-3’ (with a migration cost of 101.86MB). All accepted recommendations from the previous step (within thirty seconds) or forced recommendations (forceMigration is set to true) are re-scored and a single node is selected (here: node ‘3650320528’; marked with *). Then, NA sends TaskMigrationProcessRequest to initiate the task migration process itself. NA stores received candidate node recommendations in its memory in case the task migration fails and the next target node has to be selected.

Once the task is removed from a node, meaning it has been re-allocated, has finished its execution, has been killed or has crashed, all its candidate node recommendations are automatically invalidated and deleted. Additionally, candidate node recommendations expire after an arbitrarily defined time, in this case three minutes. This mechanism exists in order to remove recommendations with outdated node data. If no candidate node recommendations are left (or all have expired), and the node is still overloaded, the SAN process restarts from Step 1.
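The invalidation and expiry rules above amount to a small time-stamped cache keyed by task. The following sketch assumes an in-memory dictionary and explicit timestamps in seconds; the class and method names are hypothetical, and only the three-minute expiry comes from the text.

```python
from typing import Dict, List, Tuple

EXPIRY_SECONDS = 180.0  # three minutes, as in the text


class RecommendationStore:
    """Keeps candidate node recommendations per task until they expire."""

    def __init__(self) -> None:
        # task id -> list of (node id, time the recommendation was received)
        self._by_task: Dict[str, List[Tuple[str, float]]] = {}

    def add(self, task_id: str, node_id: str, received_at: float) -> None:
        self._by_task.setdefault(task_id, []).append((node_id, received_at))

    def invalidate(self, task_id: str) -> None:
        """Called when the task is re-allocated, finishes, is killed or crashes."""
        self._by_task.pop(task_id, None)

    def valid_nodes(self, task_id: str, now: float) -> List[str]:
        """Return non-expired recommendations and drop the outdated ones."""
        fresh = [(n, t) for n, t in self._by_task.get(task_id, [])
                 if now - t < EXPIRY_SECONDS]
        if fresh:
            self._by_task[task_id] = fresh
        else:
            self._by_task.pop(task_id, None)
        return [n for n, _ in fresh]
```

An empty result from `valid_nodes` for a task on a still-overloaded node corresponds to the condition under which the negotiation restarts from Step 1.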

5.5.STEP 5: MIGRATION PROCESS

Every NA actively listens for incoming migration requests. When NA receives TaskMigrationProcessRequest, it performs a final suitability check, wherein both the node’s available resources and the task constraints are validated. If the forced-migration flag is set, NA ignores the existing tasks and validates the required resources against total node resources. Occasionally, the target NA rejects the task migration process or the migration fails. In such a scenario the algorithm returns to Step 4 and selects the next candidate node (via weighted randomised selection).
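The final suitability check can be sketched as a single predicate. The `Node` structure and `can_accept` name are hypothetical, and constraints are simplified to attribute equality (an assumption; real trace constraints may use other operators). The key point shown is that the forced flag changes only the resource budget, not the constraint validation.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Node:
    total: Dict[str, float]       # total resources of the node
    used: Dict[str, float]        # resources used by existing tasks
    attributes: Dict[str, str]    # node attributes


def can_accept(node: Node, required: Dict[str, float],
               constraints: Dict[str, str], forced: bool = False) -> bool:
    # Task constraints are always validated, forced or not.
    if any(node.attributes.get(k) != v for k, v in constraints.items()):
        return False
    for res, amount in required.items():
        capacity = node.total.get(res, 0.0)
        # Forced migration ignores existing tasks and checks total capacity only.
        budget = capacity if forced else capacity - node.used.get(res, 0.0)
        if amount > budget:
            return False
    return True
```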

In practice, this happens only for 6-8% of all task migration attempts (in simulated GCD workload), the majority being the result of task migration collisions where two or more tasks are being migrated to the same node. The first-to-arrive TaskMigrationProcessRequest is generally successful, meaning that Steps 4 and 5 are repeated only for the rejected migrations. There have been no observations of an increase in collisions when the larger Cloud system is simulated (up to 100k nodes, as detailed in section 7.10). This is because a single NA communicates with only a limited set of other agents, and the P2P communication model is used exclusively. This means that the communication overhead does not go up when the system size is increased.

5.6.FORCED MIGRATION

In rare cases (approximately 10-15 out of 10k tasks), a task’s constraints restrict its execution to a very limited number of nodes. Considering this, there is a scenario in which NA wants to migrate out a given task but is unable to find an alternative node because all suitable nodes have already been allocated to other tasks, and the majority of their resources have been utilised. In such a scenario, BA returns candidate node recommendations with a forced-migration flag set. BA can also mix non-forced and forced migrations in a single response. In a worst-case scenario, all returned recommendations would be forced, but this approach ensures there is always an acceptable node to run a given task on. This prevents starvation of the task, where the task is never executed.

A forced migration flag signals that a node is capable of executing a task but that its current resource utilisation levels do not allow it to allocate additional tasks, since this will cause the node to be overloaded. Forced migration forces the node to accept the task migration request while skipping the available resources check. However, task constraints are still validated, including the check of whether the node’s total resources are sufficient to run the task. This design helps to avoid a situation where a task has very limiting constraints and only a few nodes in the system can execute it. If those nodes have no available resources then it will not be possible to allocate a task to them, and therefore the task will not run. As such, the nodes are forced to accept this task, which then may trigger the target node’s NA to migrate out some of its existing tasks to alternative nodes.
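On the BA side, mixing regular and forced recommendations could look like the sketch below. It assumes that the input nodes already match the task’s constraints, uses a single resource (CPU) for brevity, and pads the response with forced candidates up to the response limit; the padding policy and the names are assumptions, since the text does not specify how many forced candidates are returned.

```python
from typing import List, Tuple

# (node id, total CPU, used CPU); constraints are assumed to be matched already
NodeInfo = Tuple[str, float, float]


def recommend(nodes: List[NodeInfo], task_cpu: float, limit: int = 15) -> List[Tuple[str, bool]]:
    """Return (node id, forceMigration) pairs, regular candidates first."""
    regular, forced = [], []
    for node_id, total, used in nodes:
        if task_cpu > total:
            continue  # node could never run the task, even when empty
        if used + task_cpu <= total:
            regular.append((node_id, False))
        else:
            forced.append((node_id, True))  # capable, but currently too busy
    picked = regular[:limit]
    # Fill any remaining slots with forced candidates.
    picked += forced[: limit - len(picked)]
    return picked
```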

6.SERVICE ALLOCATION SCORE

SAS functions are a crucial part of the system, which greatly impacts global resource usage level. That is, they determine how well nodes’ resources are utilised. They are used when a new task is allocated or when a system needs to re-allocate an existing task to an alternative node.

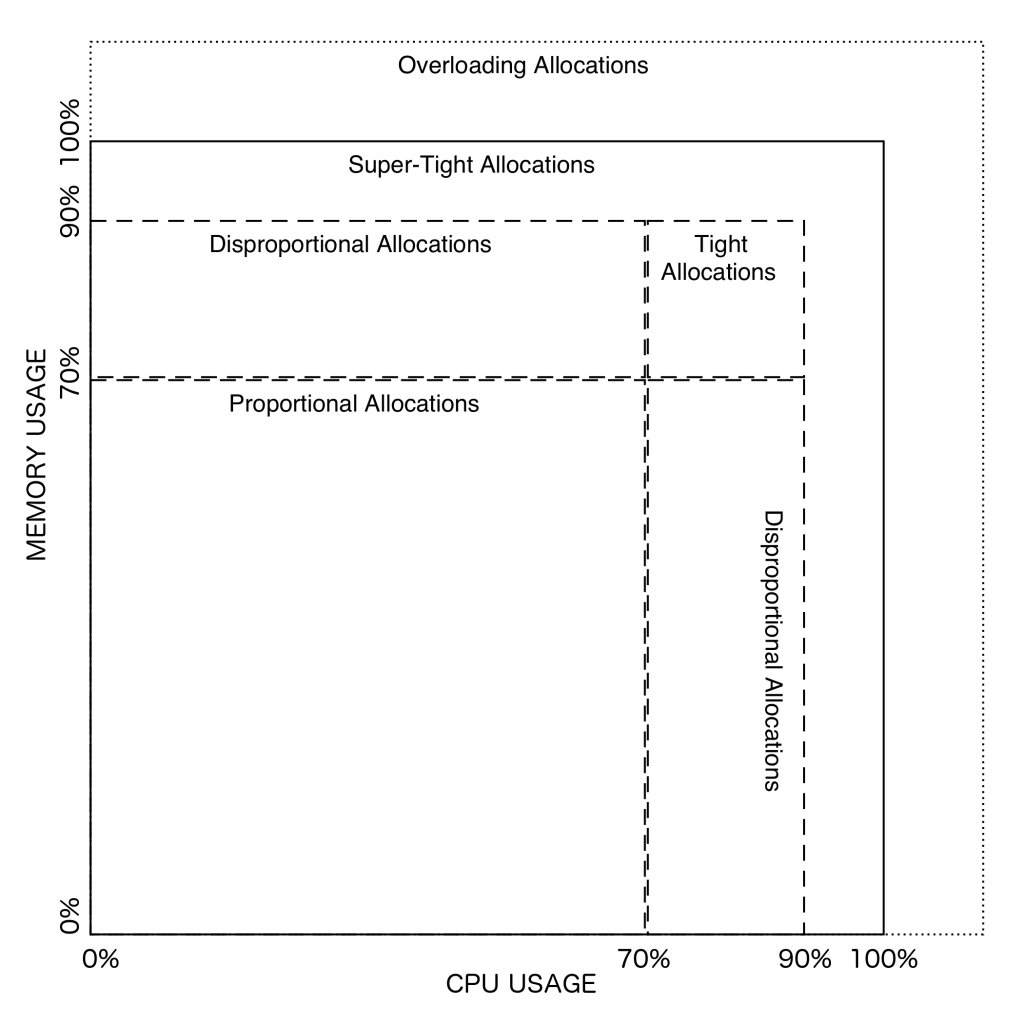

SAS functions evaluate how well a given task will fit a scored node system-wise by returning an AS value. In this implementation, SAS input is constructed from the total node resources, the currently available node resources and the currently required resources for a given task. The SAS function returns a value when a task fits the available resources on a node, and also when a node is overloaded by a task. If a node cannot fulfil a task’s constraints, the node is deemed non-suitable and the scoring function is undefined. This research concludes that node AS values fall into six separate areas:

- Idle Node – a completely idle node is a special case of allocation, in which no task has been allocated to this node. Such a node could be completely shut down, resulting in lower power usage for a cluster. In this research, idle nodes are scored most highly when determining a suitable node for initial task allocation.

- Super Tight Allocation (STA) – where some of the node’s resources are utilised in the 90%-100% range. STA is regarded as stable allocation; however, due to the dynamic resource usage, this is actually not a desirable scenario. Complete, or almost complete, resource usage can frequently lead to resource over-allocation, whereby one or more tasks increase their resource utilisation. This experimentation has determined that leaving 10% of any given resource unutilised gives the best results since it reduces task migration but still ensures the efficient use of the system resources (see discussion in subsection 6.4).

- Tight Allocation (TA) – where all node resources are utilised in the 70-90% range. This is the most desirable outcome as it promotes the best fitting allocation of tasks and, therefore, low resource wastage.

- Proportional Allocation (PA) – while tight-fit is the most desirable outcome, the majority of tasks in this research consumed a small amount of each resource. Most scheduled tasks are short batch jobs which have a very short execution time. In such a scenario, it is desirable to keep proportional resources’ usage ratios on all nodes which would, therefore, generally enable nodes to fit more tasks with ease.

- Disproportional Allocation (DA) – where the node’s resources are not proportionally utilised, thereby making it difficult to allocate additional tasks if required. For example, a setup where tasks on a node allocate 75% of CPU but only 20% of memory is not desirable.

- Overloaded Node – when allocated resources overload the total available resources on the node. Naturally, this is an unwanted situation, and such a node is given a score of zero.
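The six areas listed above can be expressed as a simple classifier over per-resource utilisation ratios. The 70%, 90% and 100% boundaries follow the definitions in the list; the `max_gap` threshold that separates PA from DA is an assumption introduced only for this sketch, since the text gives no numeric boundary between the two.

```python
from typing import Dict


def classify(utilisation: Dict[str, float], max_gap: float = 0.25) -> str:
    """Classify a node by its per-resource utilisation (1.0 = 100%)."""
    values = list(utilisation.values())
    if all(v == 0 for v in values):
        return "Idle Node"
    if any(v > 1.0 for v in values):
        return "Overloaded Node"
    if any(v > 0.9 for v in values):
        return "STA"  # some resource in the 90-100% range
    if all(v >= 0.7 for v in values):
        return "TA"   # all resources in the 70-90% range
    # Assumed rule: resources within max_gap of each other are 'proportional'.
    if max(values) - min(values) <= max_gap:
        return "PA"
    return "DA"
```

For instance, the example from the list (75% of CPU but only 20% of memory) is classified as DA, because the gap between the two resources is 55 percentage points.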

Several types of resources can be utilised by the task, such as memory, CPU cycles and disk I/O operations. The model also supports artificial resources, called ‘virtual resources’, and the number of defined resources is potentially unlimited. Figure 3 visualises AS types for two resources (CPU and memory):

Figure 3. Allocation Score types

SAS function should never allow overloading allocations to take place, in order to prevent a scored node from becoming overloaded and unstable. Additionally, during the research it was determined that STAs are very prone to over-allocating nodes and are damaging to overall system stability. Therefore, they are also accorded a score of zero. DAs increase global resource wastage and should be avoided; nevertheless, they are acceptable if none of the more desired types of AS are possible. The desirability order varies and depends on the task’s state, as discussed in subsections 6.2 and 6.3 below, while the following subsection introduces the concept of Service Allocation Lifecycle (SAL).

6.1.SERVICE ALLOCATION LIFECYCLE

Tightly fitting tasks on as few nodes as possible are beneficial for global system throughput. However, during this research the following facts were observed:

- Initially, a Cloud user specifies the task’s required resources. Users tend to overestimate the amount of resources required, wasting in some cases close to 98% of the requested resource [33]. Therefore, only after the task is executed can realistic resource utilisation values be expected. Allocating new tasks in a tight-fit way (i.e. TA and STA areas in Figure 3) results in turmoil when the task is actually executed and the exact resource usage levels are logged. Therefore, the initial allocation should aim to distribute tasks across nodes and keep the resource utilisation levels on individual nodes low (i.e. PA area in Figure 3), rather than pile them on the lowest possible number of nodes.

- In GCD, only about 20-40% of tasks qualify as long-running tasks, meaning that they run for longer than twenty minutes [44]. The remaining scheduled tasks consisted of short-term jobs which generally have much lower resource requirements than long-running tasks. The majority of tasks are short and will not exist for long at all in the system. Therefore, it is important for an initial allocation not to spend too much time in trying to tightly fit them into available nodes.

- While the majority of tasks are short-lived (up to twenty minutes), there exists a number of long-running tasks that have more demanding resource requirements, meaning that the majority of resources (55–80%) are allocated to long-lived services (ibid.). Therefore, it is more difficult to fit them into nodes, and these allocations should be much tighter to minimise global system resource waste.

Given the above reasons, the ideal scenario for a task is to be initially allocated on a lowly-utilised node, before it is gradually migrated towards more tightly-fitted allocations with other tasks.

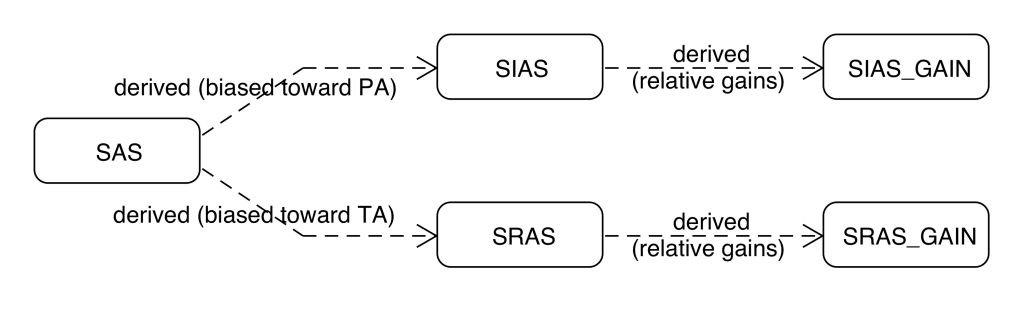

Originally, the MASB framework did not have distinct scoring functions for Service Initial Allocation Score (SIAS) and SRAS; a single SAS function, with the same scoring model as SRAS, was used for all allocations which resulted in lowered performance. The design was ultimately altered, and SAS function was split:

- During Initial Allocation, a randomly selected BA is responsible for allocating a newly arrived task to a worker node. BA uses SIAS function (detailed in subsection 6.2) to score nodes. Only a limited number of candidate node recommendations are calculated (here: 200) before selecting the top recommendations. This is to prevent scoring routine calculations from processing for too long. The limit of 200 applies only to non-forced recommendations for matching nodes.

- Once allocated (and running), a task can be re-allocated to an alternative node if necessary. In such a scenario, NA of the node on which the task is being executed is responsible for finding a candidate node. Both NA and BA use SRAS function (detailed in subsection 6.3) to score candidate nodes. Similar to calculating recommendations for new tasks, as an additional optimisation, only a limited number of candidate node recommendations are calculated before selecting the top recommendations. However, because this routine is invoked much less frequently, two thousand nodes are analysed. The two thousand limit applies only to non-forced recommendations for matching nodes.

MASB uses a network of BAs to provide a set of the best candidate nodes (nodes with the highest AS) to allocate the task. However, some applications such as Big Data frameworks often send multiples of an identical task in a batch. Those tasks execute the same program and have the same (or very similar) resource requirements. As such, a limited set of nodes will be highly scored, which may result in multiple repeated allocation requests to the same node over a very short period of time. To prevent this phenomenon, the pool of candidate nodes is randomly shuffled each time BA receives a request.
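The combination of shuffling the node pool and scoring only a bounded number of matching nodes can be sketched as follows. The function and parameter names are hypothetical; `scan_limit` corresponds to the limits of 200 (initial allocation) and two thousand (re-allocation) quoted above, and `matches` and `score` stand in for the constraint check and the SIAS or SRAS function respectively.

```python
import random
from typing import Callable, Iterable, List, Optional, TypeVar

N = TypeVar("N")


def shortlist(nodes: Iterable[N], matches: Callable[[N], bool],
              score: Callable[[N], float], scan_limit: int = 200,
              top: int = 15, rng: Optional[random.Random] = None) -> List[N]:
    rng = rng or random.Random()
    pool = list(nodes)
    rng.shuffle(pool)  # identical requests see the nodes in a different order
    scored = []
    for node in pool:
        if len(scored) >= scan_limit:
            break  # stop once enough matching nodes have been scored
        if matches(node):
            scored.append((score(node), node))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [node for _, node in scored[:top]]
```

Because each request scores a different random slice of the pool, a batch of identical tasks tends to receive different top recommendations, which spreads the resulting allocation requests over more nodes.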

Figure 4. Service Allocation Lifecycle

6.2.SERVICE INITIAL ALLOCATION SCORE

As explained in the subsection above, in order to minimise the impact of Cluster users overestimating resource requirements, the initial allocation should attempt to spread tasks widely across all system nodes. Therefore, when initially allocating new tasks, candidate nodes should be scored in the following order: PA, TA and finally DA.

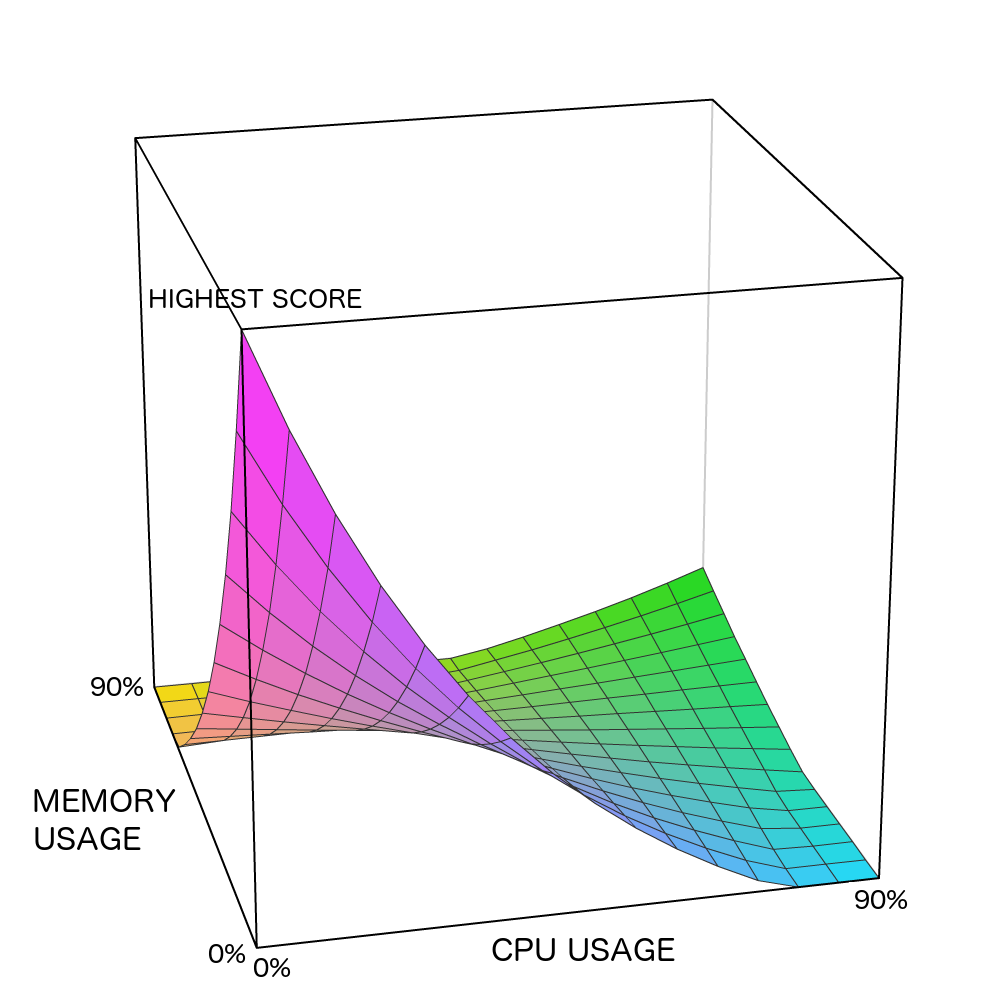

In this implementation, the SIAS function for two resource types (CPU and memory) was used. Figure 5 is a graphical representation of SIAS function:

Three separate areas can be noticed:

- Lower-left (the highest score) – this promotes PA, which will leave resource utilisation at a low level or proportionately used.

- Upper-right corner (the medium score) – this promotes TA, where tasks on this node will closely utilise all its resources.

- The upper-left and lower-right corners (the lowest score) – these DAs will leave one resource utilised almost fully and the other resource wasted.

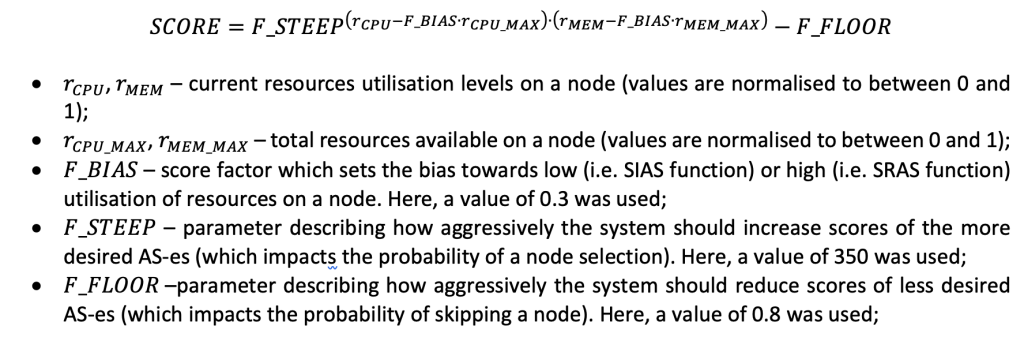

It should be noted that the maximum resource usage is 90%, and that values above this level are in an undesired STA’s area (and have zero AS). The following SIAS function was used:

Additionally, negative score values are adjusted to zero (to prevent the selection of a node). It should be noted that the SIAS is calculated exclusively from user-defined resource requirements since the actually-used resource requirements are unknown before the task execution actually starts.

6.3.SERVICE RE-ALLOCATION SCORE

This research has found that the best throughput results are achieved when tasks are packed tightly into available nodes, i.e. where global resource utilisation is the highest. The best fit scenario, where the task fully utilises 90% of all available resources on a node, is scored the highest. Therefore, when migrating existing tasks, candidate nodes should be scored in the following order: TA, PA, then DA.

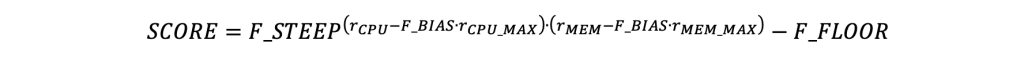

Like the SIAS function presented in 6.2, the SRAS function for two resource types (CPU and memory) was used. Figure 6 is a graphical representation of SRAS function:

Three separate areas can be noticed:

- Upper-right corner (the highest score) – this promotes TA, where tasks on this node will closely utilise all its resources.

- Lower-left (the medium score) – this promotes PA that will leave resource utilisation at a low level or proportionately used.

- The upper-left and lower-right corners (the lowest score) – these DAs will leave one resource utilised almost fully and the other resource wasted.

In this implementation, the following SRAS was used:

(with the exception of two parameters, for which values of 500 and 0.6 were used; the parameter definitions are the same as in the SIAS function in subsection 6.2)

As can be observed visually, SRAS is a mirror image to the SIAS function (presented in Figure 5). The main difference is changing the score bias (i.e. parameter) which shifts the peak score point from (0,0) to (90,90) (percentage of utilised resources), and which relates to the change in the most desirable AS from PA to TA.

It should be noted that the SRAS is calculated exclusively from actually-allocated resource requirements. User-defined resource requirements are evaluated as part of the Resource Usage Spikes (RUS) routine, explained in detail below.

6.4.RESOURCE USAGE SPIKES

Occasionally, a task might instantly increase its resource usage as a result of a sudden increase in demand for the task; at such times, a node should have the capacity to immediately accommodate this request, without needing to migrate the task to an alternative node (since this takes time). In such a situation, other VMs running on this machine can be paused or killed to let the VM instance executing this task instantly allocate more resources.

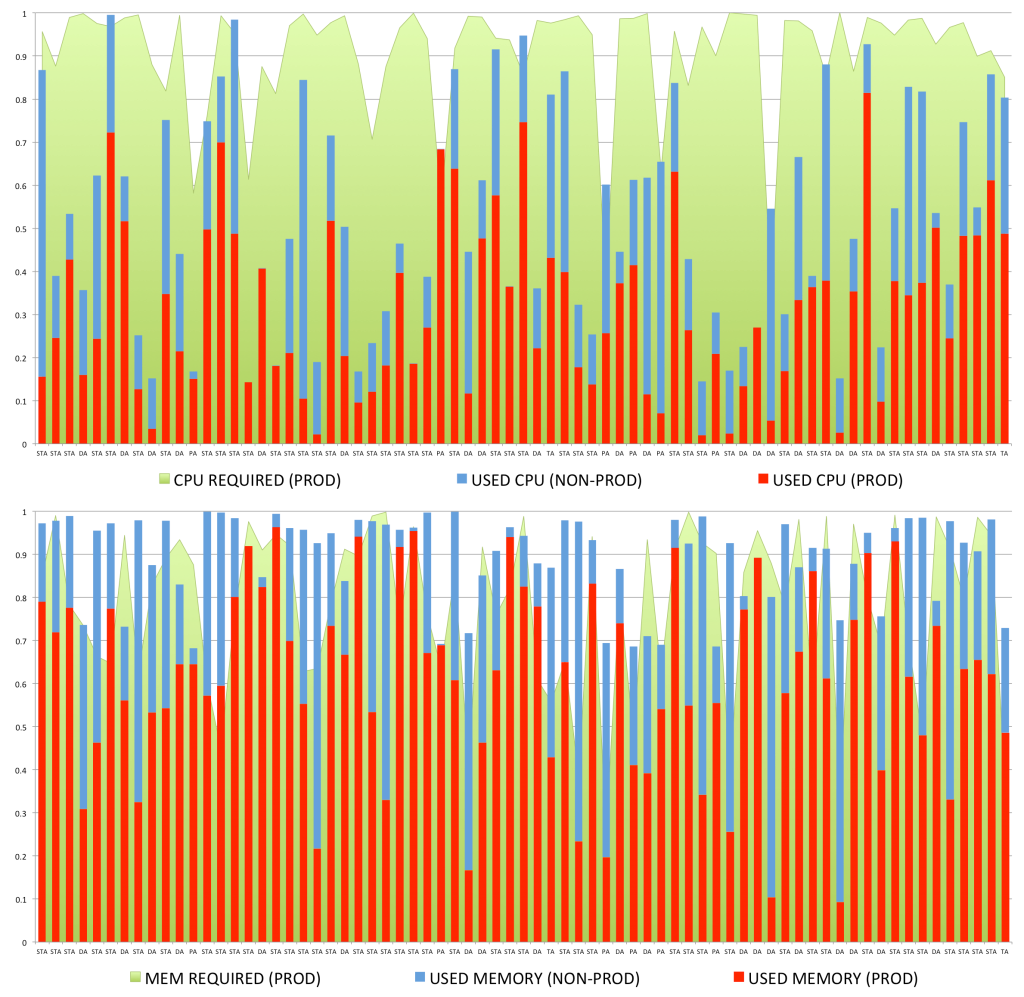

Figure 7. Production vs. non-production allocated resources

As such, an additional feature was implemented in MASB to handle RUS. Aside from checking the actually-used resources for tasks and ensuring that the node has the capacity to support it, the system also calculates the maximum possible resource usage of all production tasks based on user-defined resource requirements, as well as making sure that the node has the capacity to support all production tasks at their full resource utilisation. This constraint is limited only to production jobs since VMs running non-production jobs can be suspended without disturbing business operations.
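The two conditions described above, one on actually-used resources of all tasks and one on user-defined requirements of production tasks only, can be sketched as a single check. The dictionary layout and the function name are illustrative assumptions.

```python
from typing import Dict, List


def node_is_stable(total: Dict[str, float], tasks: List[Dict]) -> bool:
    """Each task: {'production': bool, 'requested': {...}, 'used': {...}}."""
    for res, capacity in total.items():
        # Condition 1: actually-used resources of all tasks must fit the node.
        used_all = sum(t["used"].get(res, 0.0) for t in tasks)
        # Condition 2 (RUS): production tasks must fit even at their full
        # user-defined requirements; non-production tasks can be suspended.
        requested_prod = sum(t["requested"].get(res, 0.0)
                             for t in tasks if t["production"])
        if used_all > capacity or requested_prod > capacity:
            return False
    return True
```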

The introduction of the RUS constraint adds another dimension to the task allocation logic. Figure 7 visualises how user-defined resource requirements for production tasks and actually-used resources for all tasks are integrated. In this 60-node sample (a single bar represents one node), approximately half the nodes have a very high CPU user-defined allocation for production tasks, while the real usage is much lower. It should be noted that while memory usage stays proportionally high throughout the GCD workload, the gaps between the requested and the actually-used memory are much smaller. This is a relatively common pattern for GCD workload.

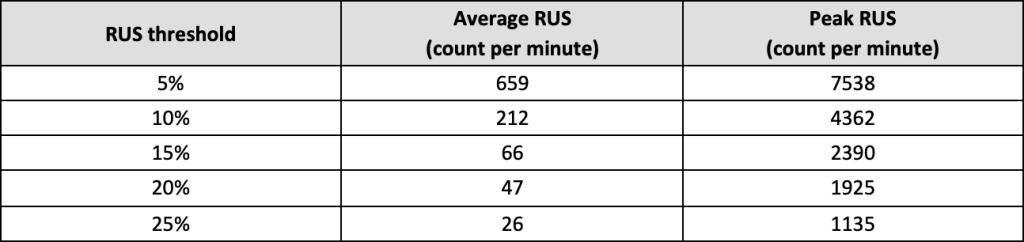

Whilst RUS do not occur frequently, they do have the significant potential to destabilise an affected node. Table 2 represents the average frequency of RUS in examined GCD workload traces with ca. 12.5k nodes and ca. 140k tasks being continuously executed by them (with different RUS thresholds examined):

Table 2. Resource Usage Spike frequencies

Here, while running a simulation based on replaying the original Borg’s allocation decisions (as detailed in subsection 7.7), the RUS threshold of 10%, i.e. whenever there was a greater than 10% increase in the overall node resource utilisation levels in any of the monitored resources, was breached 212 times per minute on average, with a peak of 4362 breaches.
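Counting threshold breaches from a series of utilisation samples can be sketched as below. The sketch interprets "a greater than 10% increase" as an increase of more than 10 percentage points of node capacity between two consecutive samples; this interpretation, as well as the sample layout, is an assumption.

```python
from typing import Dict, List


def count_spikes(samples: List[Dict[str, float]], threshold: float = 0.10) -> int:
    """samples: consecutive per-node readings, resource -> utilisation (1.0 = 100%)."""
    spikes = 0
    for prev, cur in zip(samples, samples[1:]):
        # A spike is counted when any monitored resource jumps above the threshold.
        if any(cur[r] - prev.get(r, cur[r]) > threshold for r in cur):
            spikes += 1
    return spikes
```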

In this research, a threshold of 10% was selected for the experimental simulations as an overall good balance between efficiently allocating nodes’ resources and, at the same time, leaving the running tasks enough headroom for occasional activity spikes. Generally, lower thresholds resulted in many task migrations (and thus incurred additional task migration costs), while thresholds above 10% did not utilise resources effectively (the system throughput was lowered). Consequently, the SAS functions were tuned to allocate up to 90% of all available resources on the node (as seen in Figure 3), which seems to give the best overall results.

RUS are a significant design consideration, and a misconfiguration might lead to multiple premature terminations of the tasks and suboptimal performance of the system. Google’s engineers implemented a custom resource reservation strategy using a variant of step moving average, as detailed by John Wilkes in a presentation during the GOTO 2016 conference in Berlin [55].

7.EXPERIMENTAL RESULTS

The previously developed AGOCS framework was used as the base of the experimental simulation. AGOCS is a very detailed simulator which provides a multitude of parameters and logical constraints for simulated jobs. The scope of the available variables is very broad, including memory page cache hits and instructions per CPU cycle; however, in this project simulations were based on the following assumptions:

- Requested (by user) and realistic (monitored) resources’ utilisation levels for memory and CPU;

- Detailed timing of incoming tasks and any changes in available nodes (within one-minute cycles);

- Nodes attributes and attributes’ constraints defined for tasks (as specified in GCD workload traces).

This level of detail comes at the price of extensive computing power requirements. While dry simulation itself can run on a typical desktop machine, adding layers of scheduling logic, agents’ states and inter-system communication requires a significant increase in processing time. In order to realistically and correctly simulate scheduling processes on a Cloud system, the Westminster University HPC Cluster was used.

7.1.TEST ENVIRONMENT AND CODE PROFILING

The MASB prototype was initially developed on a personal desktop, but as the size and level of detail of the simulations grew, it was necessary to move to a Cluster environment where more computing power was available.

In experiments, MASB allocated all forty available CPU cores on the HPC machine and used them continuously at 60% to 80%. The MASB process allocated ca. 7GB of memory. It is difficult to measure exactly how much computing power was spent on supporting activities such as simulating messaging interactions between agents, i.e. enqueuing and dequeuing messages to and from Akka actors. However, after tuning exercises of the default configuration, the Akka Actors framework proved to be quite resilient. It is estimated that the framework’s processing did not take up more than 10-15% of the total CPU time, with the relatively lightweight AGOCS simulator framework consuming about 15-25% of all CPU time. As an interesting note, Akka’s optional Thread-pool executor performed noticeably better on the test HPC machines than the default Fork-join-pool executor, which is based on a work-stealing pattern.

However, in a truly multi-core environment, a different approach was required, one which focused on minimising the frequency of context switches and the average CPU idle time across all available cores. Once the MASB framework was moved into the Cluster environment, the ‘pidstat’ command tool was used to gather statistics, and the framework was then refactored and fine-tuned to achieve better parallelism. During MASB simulations, the typical observed frequency of context switches was ca. 500-700 per second per thread, which is comparable with a fully loaded webserver [29].

Interestingly, Google’s BorgMaster process, which manages a single computing cell in the production environment, uses 10–14 CPU cores and up to 50GB of memory. These statistics are valid for an intensely utilised computing cell, for example one which completes more than 10k tasks per minute on average [51].

7.2.TESTABLE DESIGN

Building a framework which fully simulates the Google computing cell from GCD traces has been previously recognised as a challenging task, where there are many aspects to consider [2][45][64]. GCD traces contain details of nodes, including their resources, attributes and historical changes in their values. Traces also contain corresponding parameters for tasks, such as user-defined and actually-used resources, as well as attributes’ constraints. This has created a multi-dimensional domain with a range of relations, which has resulted in a complex, error-prone implementation. In order to mitigate the risk of coding errors, especially during rapid iterations, a number of programming practices were used:

- A comprehensive unit test suite was developed alongside the prototype code. Unit tests were executed upon every build to catch errors before the code was deployed. This software engineering pattern allowed for rapid development of the prototype and helped to maintain high code quality;

- A number of sanity checks were built into the runtime logic, such as checking whether the task’s constraints could be matched to any node’s attributes within the system and checking whether the total of all scheduled tasks’ resources exceeded the computing cell capacities;

- Recoverable logic flow was implemented for both NA and BA. In the case of various errors such as division by zero or null pointer exceptions, the error is logged but the agent continues to run;

- Keeping a separate error log file with the output of all warnings and errors was a considerable help in terms of resolving bugs.
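The two runtime sanity checks mentioned in the list can be illustrated with the following sketch. The dictionary layout, the function names and the equality-only constraint matching are assumptions made for the example.

```python
from typing import Dict, List


def unmatchable_tasks(tasks: List[Dict], nodes: List[Dict]) -> List[str]:
    """Ids of tasks whose constraints match no node's attributes in the system."""
    return [
        t["id"] for t in tasks
        if not any(t["constraints"].items() <= n["attributes"].items() for n in nodes)
    ]


def cell_overcommitted(tasks: List[Dict], nodes: List[Dict], resource: str) -> bool:
    """True if the total requested resource exceeds the capacity of the whole cell."""
    requested = sum(t["requested"][resource] for t in tasks)
    capacity = sum(n["total"][resource] for n in nodes)
    return requested > capacity
```

Running such checks periodically, and writing any findings to the separate error log, makes it easier to distinguish corrupted input data from genuine scheduling faults.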

The implementation of the above features gave high confidence that the code was of good quality and reasonably bug-free.
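The sanity checks and the recoverable logic flow listed above can be illustrated with a minimal Python sketch. It is not taken from the MASB code base: the `Node` and `Task` classes, the function names and the `masb.errors` logger are hypothetical, and constraints are simplified to exact attribute equality, whereas GCD constraints also support other comparison operators.

```python
import logging
from dataclasses import dataclass, field

# Hypothetical logger standing in for the separate error log file.
error_log = logging.getLogger("masb.errors")


@dataclass
class Node:
    cpu: float
    memory: float
    attributes: dict = field(default_factory=dict)


@dataclass
class Task:
    cpu: float
    memory: float
    constraints: dict = field(default_factory=dict)


def is_schedulable(task, nodes):
    """Sanity check: some node's attributes satisfy every task constraint.

    Assumption: a constraint is met when the attribute equals the value.
    """
    return any(
        all(node.attributes.get(key) == value
            for key, value in task.constraints.items())
        for node in nodes
    )


def exceeds_cell_capacity(tasks, nodes):
    """Sanity check: do the scheduled tasks need more than the cell offers?"""
    return (sum(t.cpu for t in tasks) > sum(n.cpu for n in nodes)
            or sum(t.memory for t in tasks) > sum(n.memory for n in nodes))


def run_agent_step(step, *args):
    """Recoverable flow: log the error and let the agent keep running."""
    try:
        return step(*args)
    except (ZeroDivisionError, TypeError, AttributeError) as exc:
        error_log.error("agent step failed: %r", exc)
        return None
```

In this sketch a failed step returns `None` instead of terminating the agent, so the caller can skip the current decision and continue with the next one.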

7.3.PLATFORM OUTPUTS

Adding detailed logging features to MASB proved surprisingly difficult. Due to the highly parallel nature of the simulated Cloud environment, an enormous number of log messages were generated upon each simulation, making it difficult to analyse the behaviour of tested algorithms. In addition, writing and flushing log streams caused pauses in simulation. Switching to the Logback framework, designed with a focus on concurrent writes, provided a solution to this problem, although it was necessary to split the data into distinct log files in order to improve readability, e.g. to separate errors from algorithms’ output data. The following outputs were used:

- Logging – in order to fine-tune MASB, extensive logging routines were implemented. All messages, counters and errors are logged to four types of log files: (i) /logs/*.log files – standard log outputs containing all log messages and also samples; (ii) /logs/*-error.log – errors and corrupted data exceptions are written to separate files to help with debugging and troubleshooting; (iii) /logs/*-ticks.csv – CSV files with periodically generated overall system stats, such as the number of idle and overloaded nodes, number of migration attempts, global resources-allocation ratio, and so on; (iv) /usage/*.csv – detailed node usage stats and task allocations, written periodically to a file, that is, every hundred minutes of simulation time.

- Sampling – while examining every decision process in MASB simulation is virtually impossible, frequent and recurrent analysis of the details and values was useful for fine-tuning the system and the scoring functions. Not all the details of every single decision process were logged, rather just a small percentage of all invocations. In the current implementation, the following items are sampled: (i) the selection of overloading tasks by the NA, ca. 1 sample per 50 invocations (a sample is presented in 5.1); (ii) the scoring and selection of candidate nodes by the BA, ca. 1 sample per 5k invocations (see log entry in 5.2) and (iii) the selection of the target node from the candidate node list, ca. 1 sample per 5k invocations (as listed in subsection 5.4).

Sampling proved to be one of the most important logging features implemented.
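The sampling idea can be sketched as a counter-based logger that emits one message per fixed number of invocations. The snippet below is an illustrative Python sketch only: the `SampledLogger` class and the `masb.samples` logger name are hypothetical, and the counter is not guaranteed to be safe under heavy concurrency, so a concurrent implementation would need an atomic counter.

```python
import itertools
import logging

log = logging.getLogger("masb.samples")


class SampledLogger:
    """Logs one in every `every` invocations of a decision process."""

    def __init__(self, name, every):
        self.name = name
        self.every = every
        self._counter = itertools.count(1)

    def maybe_log(self, make_message):
        """Call `make_message` and log its result only for sampled calls.

        Passing a callable keeps the cost of formatting a detailed
        message away from the invocations which are not sampled.
        """
        n = next(self._counter)
        if n % self.every != 0:
            return False
        log.info("[%s sample #%d] %s", self.name, n, make_message())
        return True
```

With the rates quoted above, the NA task selection would use `SampledLogger("na-selection", 50)` and the BA scoring would use `SampledLogger("ba-scoring", 5000)`.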

7.4.SYSTEM EVOLUTIONS AND OPTIMISATIONS

In order to achieve high resource utilisation and low resource waste, several enhancements were implemented and then fine-tuned, including:

- Limiting the number of candidate nodes returned from BA to fifteen, and introducing the forced migrations feature (subsection 5.6);

- Fine-tuning SCS routine to maintain the balance between migration cost and the node allocation score, which refers to finding the right combination of steps of the TS algorithm, as well as its termination depth;

- Splitting the SAS function into SIAS and SRAS and then limiting the number of candidate nodes examined in those functions (200 and 2k respectively);

- Adjusting input parameters for SIAS and SRAS functions, namely values for , and for the best results based on samples logged (subsections 6.2 and 6.3);

- Adding the timestamp parameter to the candidate node recommendations, and regularly removing those which have expired. In scenarios where the task migration request is repeatedly refused, this mechanism forces NA to disregard the results of old calculations and request newly scored recommendations from BAs. In this implementation, the recommendation’s age threshold was set to three minutes (simulation time) with lower values not yielding better results (see subsection 5.4).
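The expiry of candidate node recommendations can be sketched as follows. This is a minimal Python illustration under stated assumptions: the `Recommendation` record, the function names and the convention that a higher score is better are hypothetical; only the three-minute threshold comes from the text.

```python
from dataclasses import dataclass

MAX_AGE_SECONDS = 3 * 60  # three minutes of simulation time


@dataclass(frozen=True)
class Recommendation:
    node_id: str
    score: float
    created_at: float  # simulation time in seconds


def drop_expired(recommendations, now, max_age=MAX_AGE_SECONDS):
    """Keep only the recommendations that are not older than `max_age`."""
    return [r for r in recommendations if now - r.created_at <= max_age]


def next_target(recommendations, now):
    """Return the best fresh candidate, or None when all have expired.

    A None result signals that the NA should request newly scored
    recommendations from the BAs. Assumption: higher score is better.
    """
    fresh = drop_expired(recommendations, now)
    return max(fresh, key=lambda r: r.score, default=None)
```

Returning `None` rather than a stale candidate is what forces a new scoring round when a migration request has been refused repeatedly.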

7.5.TEST SIMULATIONS SETUP

During the later stages of the development of the MASB prototype, several simulations were continuously run. They were frequently paused, tuned and then resumed to see whether a given tweak would improve the results. This methodology allowed the research to progress at a good speed while simultaneously iterating a number of ideas and tweaks. Therefore, the testing process did not have noticeable stages, but instead the stages blended into each other. This said, it is possible to logically split the testing into four main areas:

- Benchmarking – GCD workload traces also contain the actual task allocations made by Google’s Borg scheduler. In the Borg simulation, MASB replays all recorded events, mirroring task allocations as per the Google scheduler, i.e. not using its own scheduling logic. This simulation was used as a control run in order to test the system, and also as a benchmark to compare results with the original allocations.

- Throughput – secondly, MASB was tested to identify whether it was capable of allocating the same workload as the Borg system. The size of the workload was then increased gradually in 2% steps while preserving the configuration of the system nodes. To ensure the correctness of results, a second technique, called ‘cell compaction’ [51], was also used, in which system nodes were removed instead of additional tasks being added. The results were then compared to the original GCD workload.

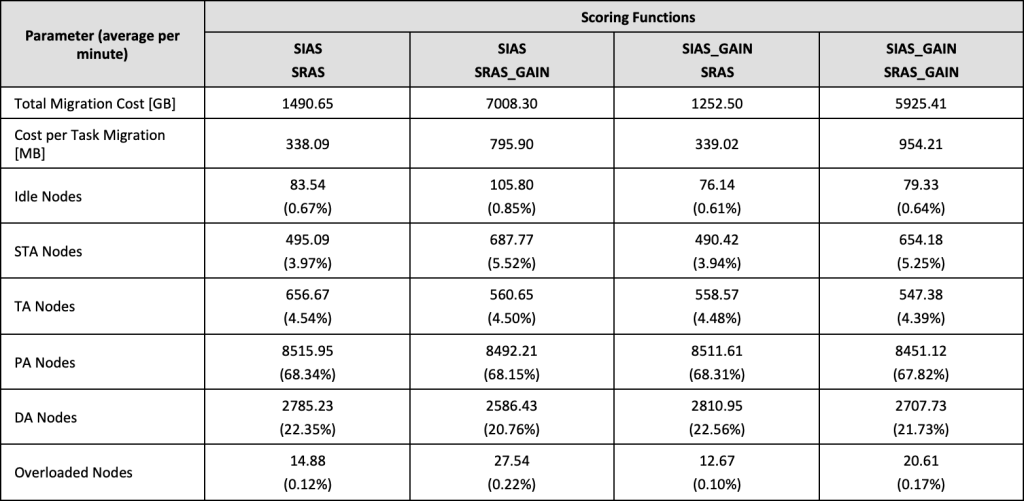

- Migration Cost – thirdly, this batch of experiments focused on migration costs incurred via use of VM-LM. A collection of different SAS functions and their variants were tried in order to research their impact on total migration cost while allocating the given workload.

- Scalability – finally, the MASB simulation was run with multiples of the GCD workload in order to test the scalability limits of the designed solution. Although this step was the least work-intensive, it took the longest time to perform.

As noted in [64], simulating GCD workload is not a trivial task. The main challenge when running such large and complex simulations is the demand for computation power and the continuous processing. During this experiment, the AGOCS framework was modified to also allow the testing of computing cells larger than 12.5k nodes. This was achieved by duplicating randomly selected existing tasks and their events; for example, ‘Create Task A event from GCD workload trace files’ will create AddTaskWorkloadEvent events for tasks A and A’. This feature is based on the hash code of the object’s ID, which is a constant value.
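Because the hash of a task’s ID never changes, every event belonging to the same task receives the same duplication decision, which keeps the lifecycle of the cloned task consistent. The Python sketch below illustrates the principle only; it is not the AGOCS implementation. The use of `zlib.crc32` as the stable hash, the percentage threshold and the function names are assumptions (Python’s built-in `hash` is randomised per process for strings, so it would not give repeatable results).

```python
import zlib


def should_duplicate(task_id, extra_percent):
    """Deterministic pseudo-random selection from a stable hash of the ID.

    Assumption: roughly `extra_percent` percent of tasks are cloned.
    """
    stable_hash = zlib.crc32(str(task_id).encode("utf-8"))
    return stable_hash % 100 < extra_percent


def expand_events(events, extra_percent):
    """Yield every (task_id, payload) event, plus a clone for chosen tasks."""
    for task_id, payload in events:
        yield task_id, payload
        if should_duplicate(task_id, extra_percent):
            # The clone A' receives the same event as the original task A.
            yield f"{task_id}'", payload
```

This sketch covers workloads of up to 200% of the original; larger multiples would need more than one clone per selected task.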

The largest experiments simulated a single Cloud computing cell with 100k nodes and required nine months of uninterrupted processing on one of the University of Westminster HPC cluster’s nodes. At this juncture, it should be noted that early simulations often failed due to unforeseen circumstances, such as NAS detachment or network failure. One solution to this was to frequently save snapshots of the state of the simulation and to keep a number of previous snapshots in case of a file write failure.
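A snapshot routine of this kind can be sketched in Python as shown below. The sketch is illustrative and makes several assumptions which are not in the original: the state is serialised with `pickle`, snapshots are named after the simulation tick, and the three most recent files are retained (`keep` is assumed to be at least one).

```python
import os
import pickle
import tempfile


def save_snapshot(state, directory, tick, keep=3):
    """Write a snapshot atomically, then prune all but the `keep` newest."""
    os.makedirs(directory, exist_ok=True)
    final_path = os.path.join(directory, f"snapshot-{tick:012d}.pkl")
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "wb") as handle:
        pickle.dump(state, handle)
        handle.flush()
        os.fsync(handle.fileno())
    # The rename happens only after a complete write, so an interrupted
    # write cannot replace an existing snapshot with a partial file.
    os.replace(tmp_path, final_path)
    snapshots = sorted(f for f in os.listdir(directory) if f.endswith(".pkl"))
    for stale in snapshots[:-keep]:
        os.remove(os.path.join(directory, stale))
    return final_path


def load_latest(directory):
    """Load the newest readable snapshot, falling back to older ones."""
    for name in sorted(os.listdir(directory), reverse=True):
        if not name.endswith(".pkl"):
            continue
        try:
            with open(os.path.join(directory, name), "rb") as handle:
                return pickle.load(handle)
        except (OSError, pickle.UnpicklingError, EOFError):
            continue  # damaged file: try the previous snapshot
    return None
```

Keeping several generations means that a snapshot damaged by a storage failure does not force the simulation to restart from the beginning.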

7.6.ALLOCATION SCORE RATIOS

Clearly, when examining the suitability of load balancing, the key parameter is the number of overloaded nodes, which should be kept to a minimum. It was found that replaying GCD traces using Google’s original Borg allocation decisions results in up to 0.5% of nodes being overloaded in a simulated one-minute period. It was assumed that this phenomenon was the result of delayed and compacted resource usage statistics, which were recorded and averaged over ten-minute periods. As such, in further experiments this ratio was used as an acceptable error margin.

The second researched property was how nodes were distributed amongst allocation score types during simulations. Therefore, each experiment recorded the number of nodes with each allocation score type, and averaged them out over the simulation period. The set of normalised values for STA, TA, PA and DA are referred to as Allocation Score Ratios (ASR). Idle Nodes and Overloaded Nodes are discussed separately, and they are excluded from the ASR. The ASR values describe how well the Cluster is balanced, that is, how well nodes are balanced as a whole group.

The ASR values are used to describe the experimental results presented in the subsections below to highlight the differences in how various load balancing strategies perform under a GCD workload.
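One possible way of computing the ASR from periodic node counts is sketched below in Python. It assumes that ‘normalised’ means the four averaged counts are scaled to sum to one; the function name, the input layout and the `IDLE` and `OVERLOADED` keys are hypothetical.

```python
from collections import Counter

ASR_TYPES = ("STA", "TA", "PA", "DA")


def allocation_score_ratios(per_minute_counts):
    """Average node counts per score type over the run, then normalise.

    `per_minute_counts` is a list of dicts such as
    {"STA": 600, "TA": 650, "PA": 8500, "DA": 2750, "IDLE": 40};
    idle and overloaded nodes are ignored.
    """
    totals = Counter()
    for counts in per_minute_counts:
        for kind in ASR_TYPES:
            totals[kind] += counts.get(kind, 0)
    minutes = len(per_minute_counts)
    if minutes == 0 or sum(totals.values()) == 0:
        return {kind: 0.0 for kind in ASR_TYPES}
    averages = {kind: totals[kind] / minutes for kind in ASR_TYPES}
    denominator = sum(averages.values())
    return {kind: averages[kind] / denominator for kind in ASR_TYPES}
```

Dividing the resulting `PA` value by the `DA` value then gives the PA to DA ratio directly.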

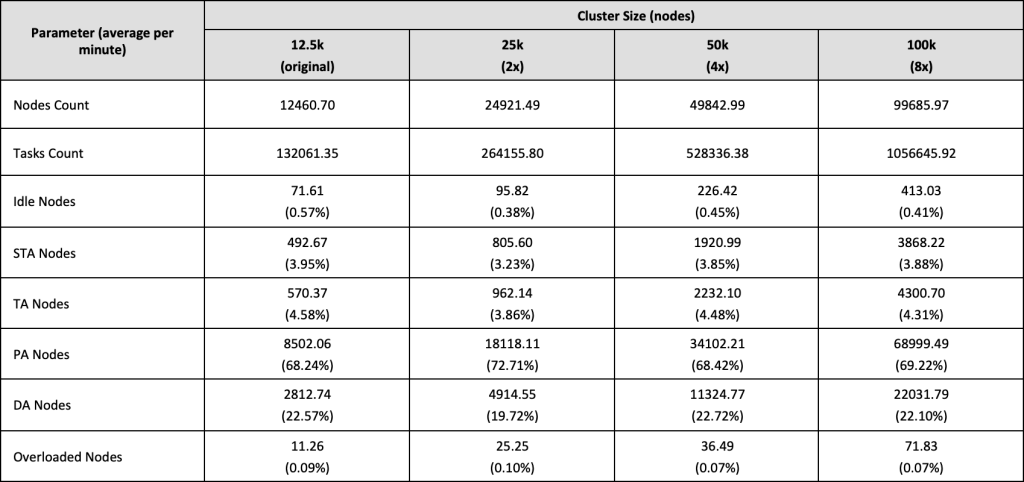

The most dominant AS was PA, meaning that each of the node’s resources is utilised between 0% and 70%. Ca. 68% of all the cluster’s nodes are found within these parameters, which is the direct result of their initial allocation using the SIAS function. The second biggest group, ca. 22% of all servers, are disproportionately allocated nodes, in which one or more resources are highly used but the other resources are relatively idle. The remainder of the nodes have either an STA or TA allocation score type. The PA to DA ratio of roughly 3:1 is characteristic of a typical workload as recorded in GCD traces and processed by MASB.

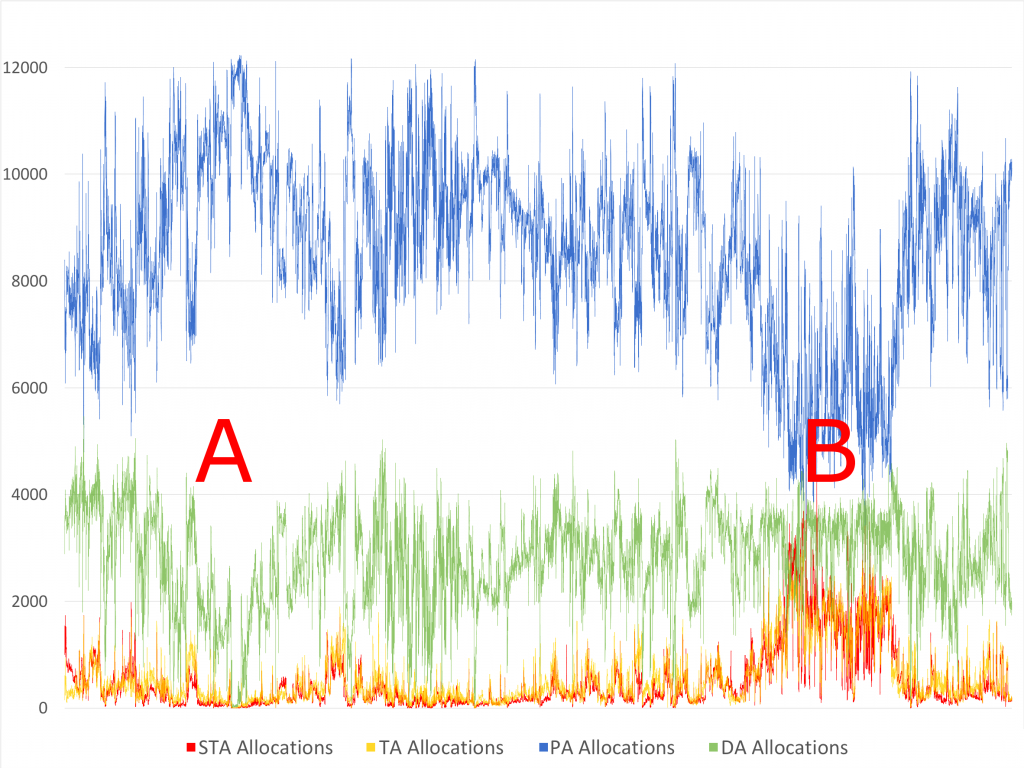

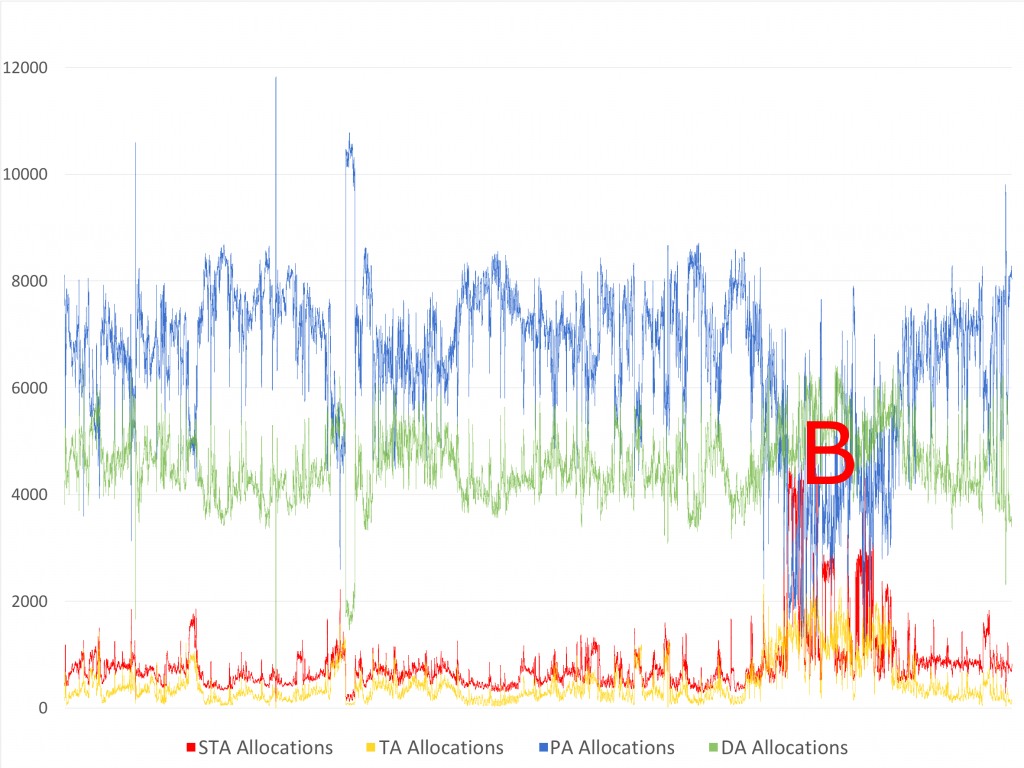

The chart in Figure 8 visualises the AS distribution during a month-long simulation. The horizontal axis is the measure of time and the vertical axis represents the number of nodes having a particular allocation type (as per coloured legend). The chart also highlights two periods of low and elevated workload, marked A and B respectively:

- During the low workload period (A), the SIAS function can schedule most newly-arriving tasks to relatively unused nodes, thereby successfully preserving their resource usage proportions. As such, the number of PAs increases while the number of DAs decreases. Existing long-running services continue to run uninterrupted on their nodes, and so the ratio of STA to TA remains flat.

- During an elevated workload period (B), the SIAS function is no longer able to find relatively unused nodes. It thus selects lower quality allocations, resulting in a decrease in PAs. Due to the scarcity of resources, tasks are also re-allocated more frequently by the SRAS function. This results in tighter fit allocations, which is seen as an increase in STA and TA counts.

This cycle is repeated throughout cluster activity, wherein MASB balances the workload. The subsections which follow describe several implemented optimisations and their rationales, as well as the experimental results and a commentary on them.

Figure 8. MASB – Allocation Scores distribution (12.5k nodes)

7.7.BENCHMARK

Given that GCD traces have a complicated structure and contain a vast amount of data, only rarely are they analysed to the full extent of their complexity. MASB design shares similarities with BorgMaster in areas such as constraining tasks, defining memory and CPU cores as resources, using scoring functions for candidate node selection, and handling RUS. It also closely follows the lifecycle of tasks as presented in [19]. As things stand, there is no publicly available literature which contains descriptions of similar experiments which could be compared with the simulation results of MASB. Therefore, the closest comparable results are the original Borg’s allocation decisions that were recorded in GCD traces. For the purposes of this research, it was decided that they be used as a benchmark for the results from MASB’s experiments.

Both simulations processed full month-long GCD traces. The average values were used because MASB simulation works in one-minute intervals whilst GCD traces provide usage statistics in ten-minute windows that occasionally overlap. Given this, peak or median values were not accurate.

To highlight the differences between the workings of the MASB and Google Borg algorithms, Figure 9 presents the AS distribution during the period recorded in GCD (replayed Google’s Borg allocation events). In comparison to the Borg data, the MASB experimental data presented in Figure 8 shows that MASB behaves more organically during periods of low and elevated workload. This is especially visible during the period of elevated workload (B), where MASB managed to preserve a better ratio of PA to DA Nodes than Google’s Borg. This behaviour is the result of allowing a given task to be re-allocated during its execution, meaning that MASB can dynamically shape its workload and improve the health of its allocations. This feature also allows greater flexibility when the requirements of running tasks change, since the load balancer can attempt to offload such tasks to an alternative node.

Figure 9. Borg – Allocation Scores distribution (12.5k nodes)

Table 3 directly compares ASR parameters of both pre-recorded Google’s Borg and MASB simulations:

Table 3. Benchmark results – Borg and MASB

The listed ASR values highlight the differences in Borg and MASB workings:

- Idle Nodes – Borg’s design has a definite advantage over MASB because Borg’s schedulers can access the shared cluster’s state and iterate over the complete set of system nodes. MASB relies on a network of BAs, each of which has only partial information about the cluster’s state. Therefore, a subset of idle nodes might never be scored, even if they represent the best allocation for a given task.

- STA and TA Nodes – in both systems, under normal workload conditions, incoming tasks are reasonably well distributed between the nodes. Only ca. 10% of all system nodes register higher resource usage scores, that is, when at least one of the resource utilisation levels crosses 90%. The exact scoring algorithm of Google’s Borg has not been disclosed, but the results suggest a degree of similarity to the SIAS function.

- PA and DA Nodes – the ratio of PAs to DAs is visibly different in Borg and MASB. Borg’s original scheduling decisions had a ratio of roughly 3:2, meaning that for every three proportionally allocated nodes in the system, there were two nodes that were disproportionately allocated. MASB managed to achieve a better ratio of 3:1, suggesting that the use of the SIAS and SRAS scoring functions together with the VM-LM feature can potentially create a more balanced scheduling system.

Given the superior ratio of PA to DA nodes as measured, and the possibility of increased throughput, the next experiment focused on processing increased workload.

7.8.THROUGHPUT TESTS

The MASB framework has been designed as a general solution for balancing workload in a decentralised computing system. After numerous iterations, MASB was eventually able to schedule the entire GCD workload, with additional tasks also added.

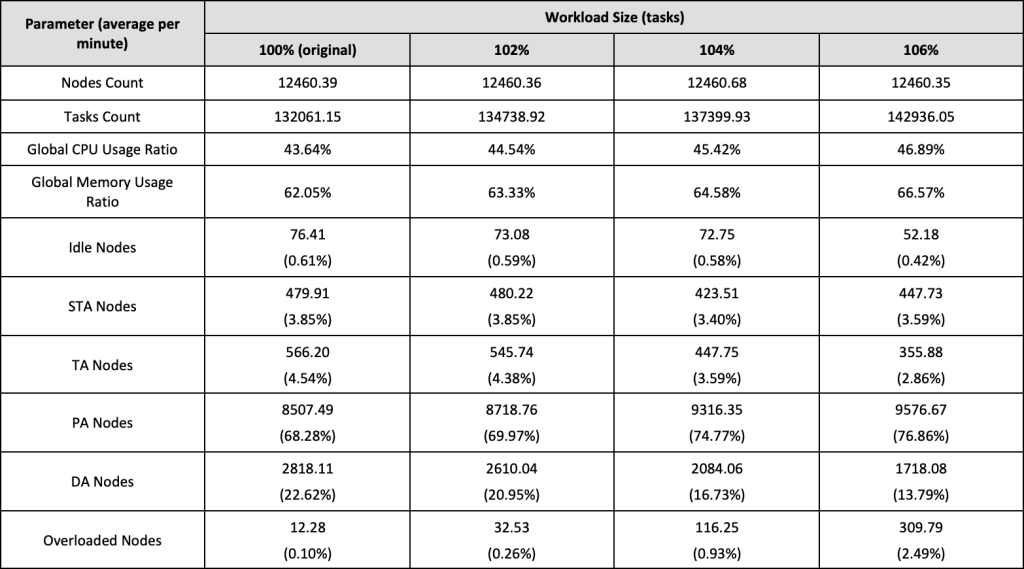

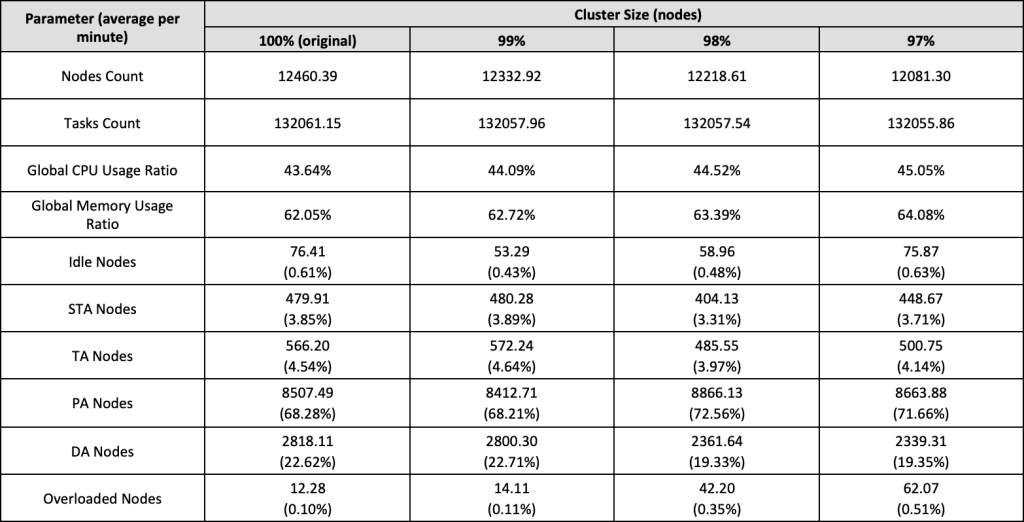

Table 4 presents a comparison of the results with different workload sizes:

Table 4. Throughput results (100%-106% workload)

As demonstrated above, MASB was able to schedule, on average, an additional ca. 2.6k tasks per minute (ca. 2% more tasks). Further tuning was unable to improve those results, with workload sizes greater than 102% increasing the number of overloaded nodes above the defined threshold of 0.5%.